Whisper is an automatic speech recognition (ASR) system that can understand multiple languages. It has been trained on 680,000 hours of supervised data collected from the web.

Whisper is developed by OpenAI. It’s free and open source.

Speech processing is a critical component of many modern applications, from voice-activated assistants to automated customer service systems. This tool will make it easier than ever to transcribe and translate speech, making it more accessible to a wider audience. OpenAI hopes that by open-sourcing their models and code, others will be able to build upon their work to create even more powerful applications.

Whisper can handle transcription in multiple languages, and it can also translate those languages into English.

It will also be used by commercial software developers who want to add speech recognition capabilities to their products. This will help them save a lot of money since they won’t have to pay for a commercial speech recognition tool.

I think this tool is going to be very popular, and I think it has a lot of potential.

In this tutorial, we’ll get started using Whisper in Google Colab. We’ll quickly install it, and then we’ll run it with one line to transcribe an mp3 file. We won’t go in depth; we just want to test it out to see what it can do.

Quick Video Demo

This is a short demo showing how we’ll use Whisper in this tutorial.

Using Whisper For Speech Recognition Using Google Colab

[powerkit_alert type=”info” dismissible=”false” multiline=”false”]Google Colab is a cloud-based service that allows users to write and execute code in a web browser. Essentially Google Colab is like Google Docs, but for coding in Python.

You can use Google Colab on any device, and you don’t have to download anything. For a quick beginner-friendly intro, feel free to check out our tutorial on Google Colab to get comfortable with it.[/powerkit_alert]

If you don’t have a powerful computer or don’t have experience with Python, using Whisper on Google Colab will be much faster and hassle-free. For example, on my computer (i7-7700K CPU, GTX 1660 SUPER GPU) transcribing 30 seconds of audio takes a few minutes, whereas on Google Colab it takes a few seconds.

Open a Google Colab Notebook

First, we’ll need to open a Colab Notebook. To do that, you can just visit this link https://colab.research.google.com/#create=true and Google will generate a new Colab notebook for you.

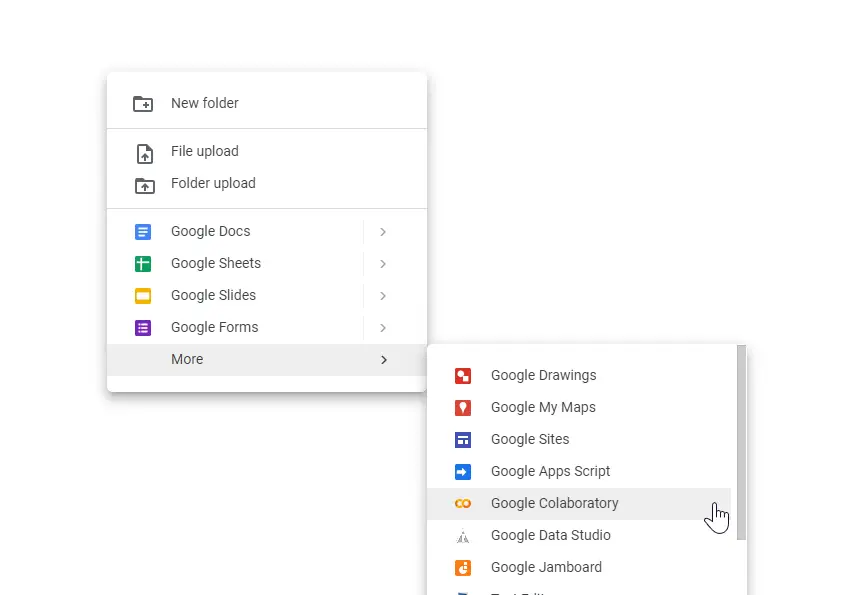

Alternatively, you can go anywhere in your Google Drive > Right Click (in an empty space like you want to create a new file) > More > Google Colaboratory. A new tab will open with your new notebook. It’s called Untitled.ipynb but you can rename it anything you want.

Enable GPU

Next, we want to make sure our notebook is using a GPU. Google often allocates us a GPU by default, but not always.

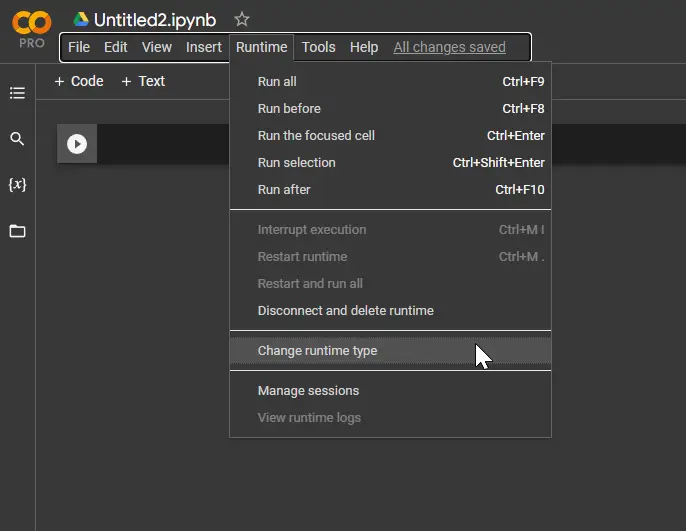

To do this, in our Google Colab menu, go to Runtime > Change runtime type.

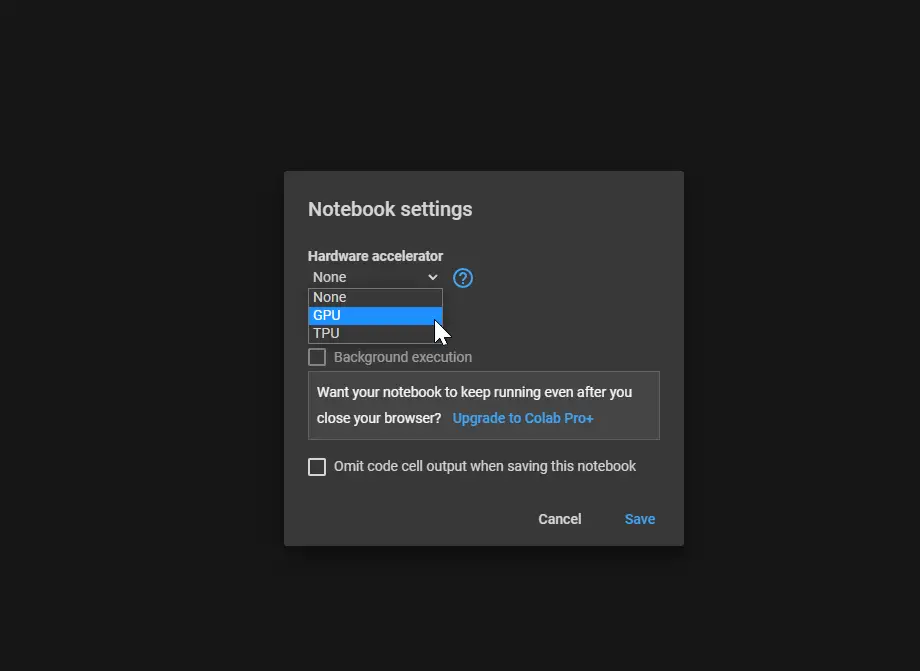

Next, a small window will pop up. Under Hardware accelerator there’s a dropdown. Make sure GPU is selected and click Save.
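To confirm that the runtime really received a GPU, a quick check can be run in a new cell. This is only a minimal sketch using the Python standard library. It assumes the `nvidia-smi` tool is present on GPU runtimes (it normally ships with the NVIDIA driver), and the helper name `gpu_visible` is made up for illustration.

```python
import shutil
import subprocess


def gpu_visible() -> bool:
    """Return True if `nvidia-smi` exists and reports at least one GPU."""
    exe = shutil.which("nvidia-smi")
    if exe is None:
        # No NVIDIA driver tools on PATH, so this is most likely a CPU-only runtime.
        return False
    try:
        # `nvidia-smi -L` prints one line per detected GPU.
        result = subprocess.run(
            [exe, "-L"], capture_output=True, text=True, timeout=30
        )
    except (OSError, subprocess.TimeoutExpired):
        return False
    return result.returncode == 0 and "GPU" in result.stdout


print("GPU available:", gpu_visible())
```

If this prints `False`, go back to Runtime > Change runtime type and check the hardware accelerator setting.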

Install Whisper

Now we can install Whisper. (You can also check the install instructions in the official GitHub repository.)

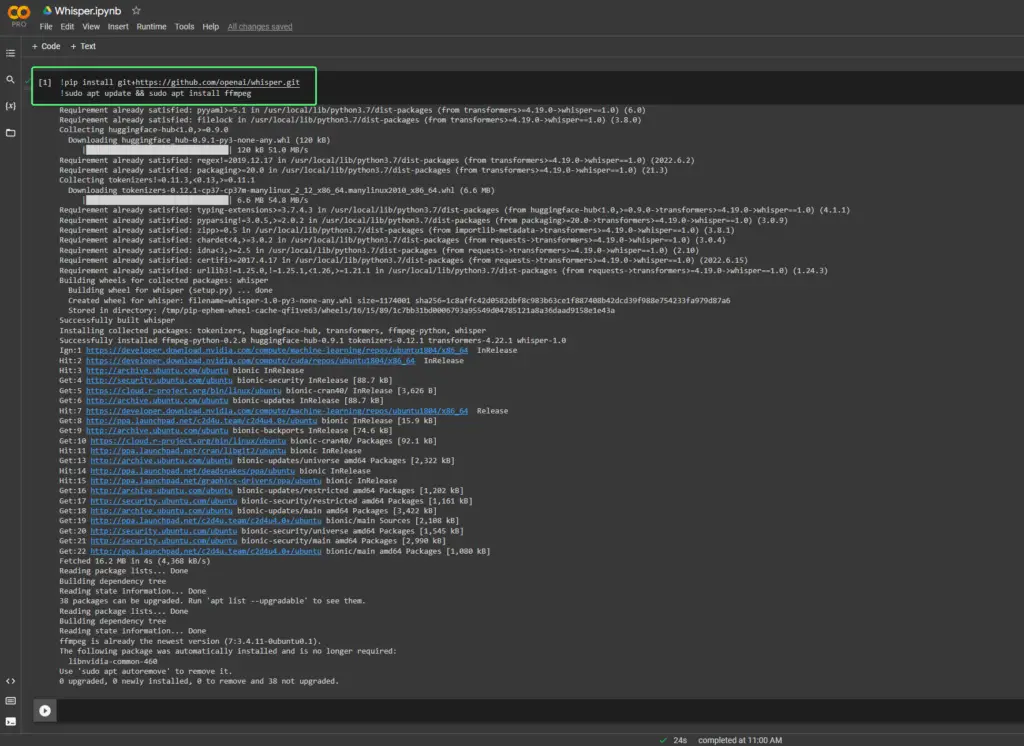

To install it, just paste the following lines in a cell. To run the commands, click the play button at the left of the cell or press Ctrl + Enter. The install process should take 1-2 minutes.

!pip install git+https://github.com/openai/whisper.git

!sudo apt update && sudo apt install ffmpeg

[powerkit_alert type=”info” dismissible=”false” multiline=”false”]

Note: We’re prefixing every command with ! because that’s how Google Colab runs shell commands instead of Python code. If you’re using Whisper on your own computer, in a terminal, then leave out the ! at the beginning of the line.

[/powerkit_alert]
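Once the install cell has finished, it can be worth checking that both pieces are in place before moving on. The sketch below is not part of Whisper; `check_whisper_setup` is a hypothetical helper that only looks for the `whisper` Python package and the `ffmpeg` executable.

```python
import importlib.util
import shutil


def check_whisper_setup() -> dict:
    """Report whether the `whisper` package and the `ffmpeg` binary can be found."""
    return {
        # find_spec returns None when the package cannot be imported.
        "whisper_package": importlib.util.find_spec("whisper") is not None,
        # shutil.which returns None when the executable is not on PATH.
        "ffmpeg_binary": shutil.which("ffmpeg") is not None,
    }


for name, ok in check_whisper_setup().items():
    print(f"{name}: {'found' if ok else 'MISSING'}")
```

If either line says MISSING, re-run the install cell and look at its output for errors.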

Upload an Audio File

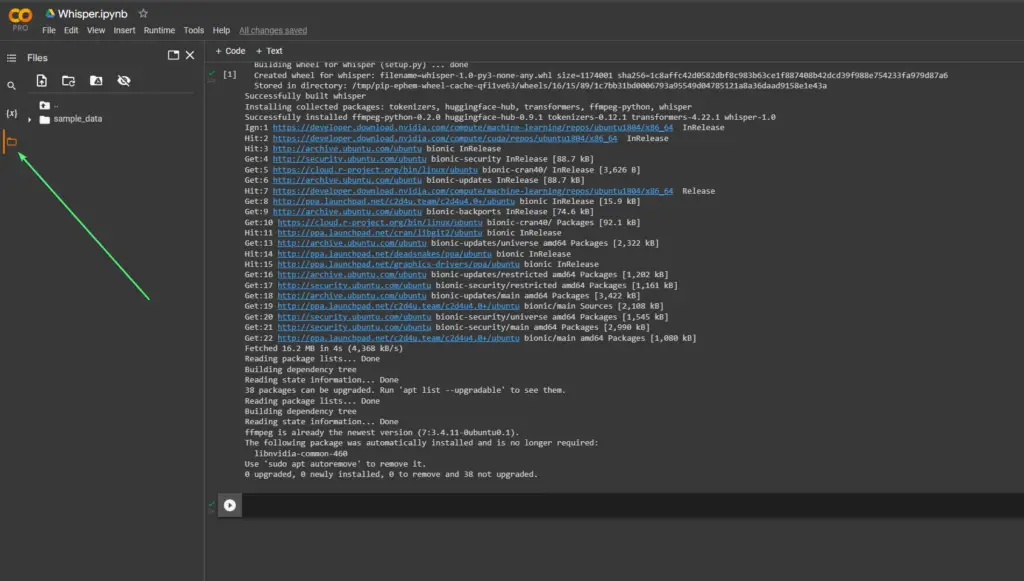

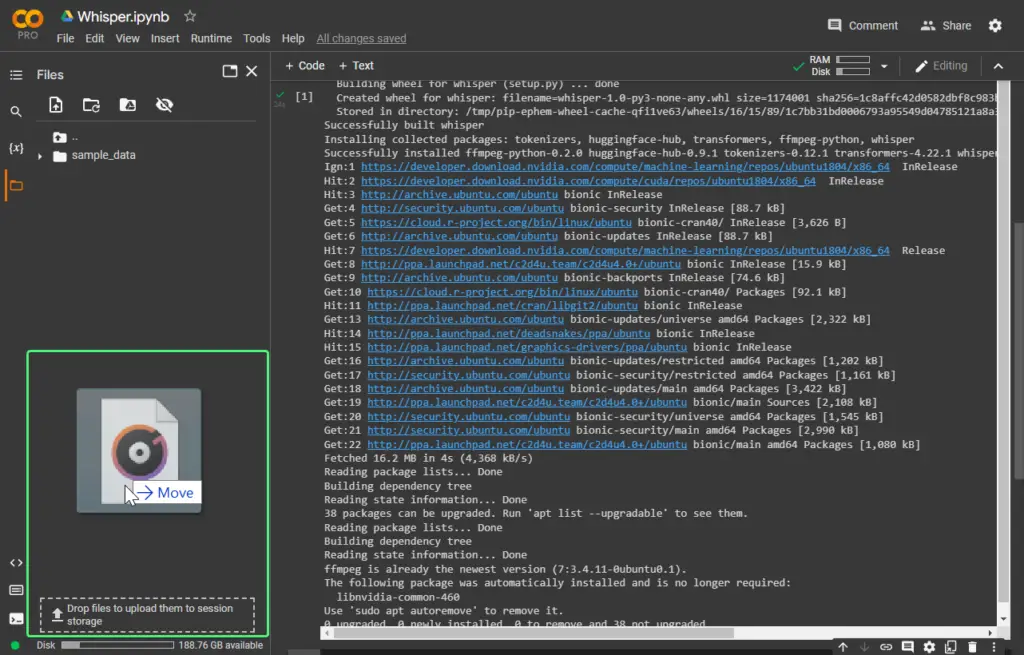

Now, we can upload a file to transcribe it. To do this, open the File Browser at the left of the notebook by pressing the folder icon.

Now you can press the upload file button at the top of the file browser, or just drag and drop a file from your computer and wait for it to finish uploading.
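To double-check that the upload finished, you could list the audio files that the notebook can see. This is a small sketch with a made-up helper name (`find_audio_files`), and the extension list is just an illustrative subset, not something defined by Whisper.

```python
from pathlib import Path

# Extensions treated as audio here; an illustrative subset only.
AUDIO_EXTENSIONS = {".mp3", ".wav", ".m4a", ".flac", ".ogg"}


def find_audio_files(directory: str = ".") -> list:
    """Return the audio files directly inside `directory`, sorted by path."""
    return sorted(
        p
        for p in Path(directory).iterdir()
        if p.is_file() and p.suffix.lower() in AUDIO_EXTENSIONS
    )


# List what is in the current working directory, with file sizes.
for path in find_audio_files():
    size_mb = path.stat().st_size / 1_000_000
    print(f"{path.name}  ({size_mb:.1f} MB)")
```

If your file shows up with a size of 0.0 MB, the upload is probably still in progress.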

Run Whisper to Transcribe Speech to Text

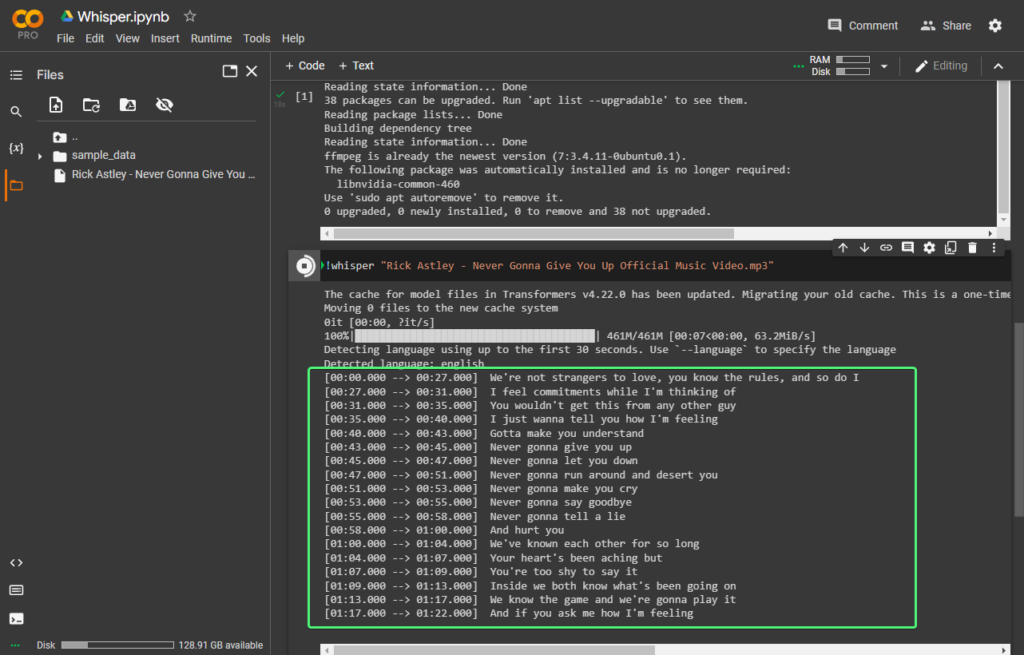

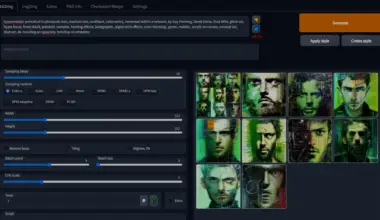

Next, we can simply run Whisper to transcribe the audio file using the following command. If this is the first time you’re running Whisper, it will first download the model it needs.

!whisper "Rick Astley - Never Gonna Give You Up Official Music Video.mp3"

In less than a minute, it should start transcribing.

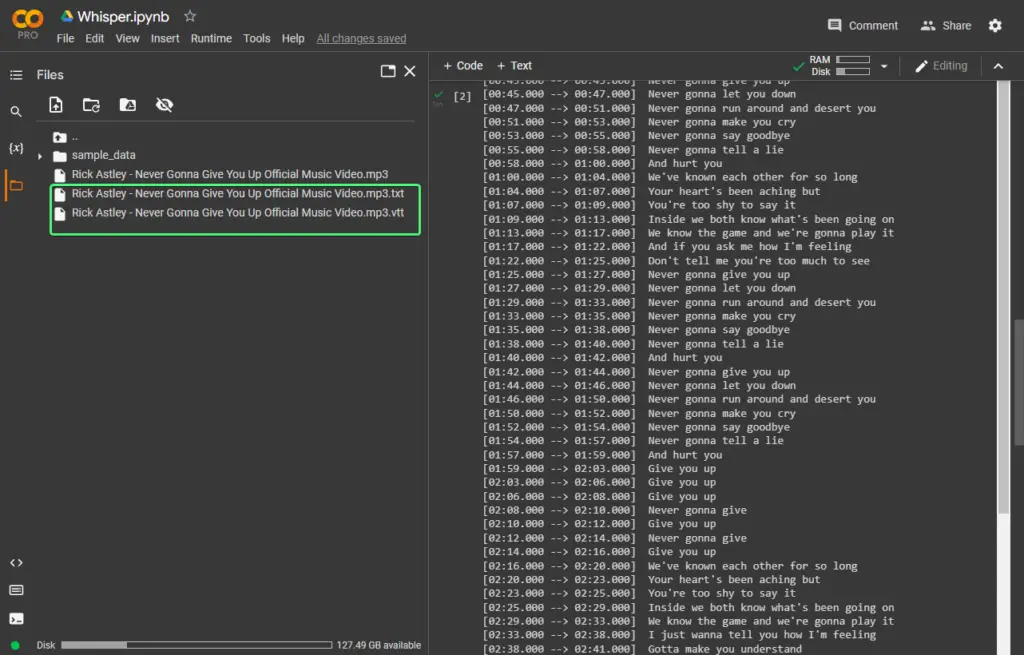

When it’s finished, you can find the transcription files in the same directory in the file browser:
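If you would rather read the results from a cell than click through the file browser, something like the following should work. It is a sketch under a few assumptions: the transcripts are written next to the audio file, their names start with the audio file's name, and the formats are .txt, .srt and .vtt (the exact names and formats can differ between Whisper versions). The helper names and `my_audio.mp3` are placeholders.

```python
from pathlib import Path

# Output formats assumed here; the exact set depends on the Whisper version.
TRANSCRIPT_EXTENSIONS = {".txt", ".srt", ".vtt"}


def find_transcripts(audio_path: str) -> list:
    """Return transcript files in the audio file's directory that share its name."""
    audio = Path(audio_path)
    return sorted(
        p
        for p in audio.parent.iterdir()
        if p.is_file()
        and p.suffix.lower() in TRANSCRIPT_EXTENSIONS
        and p.name.startswith(audio.stem)
    )


def read_transcript(path) -> str:
    """Read a transcript file as UTF-8 text."""
    return Path(path).read_text(encoding="utf-8")


# Placeholder file name; replace it with the file you uploaded.
for path in find_transcripts("my_audio.mp3"):
    print(path.name)
```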

Using Whisper Models

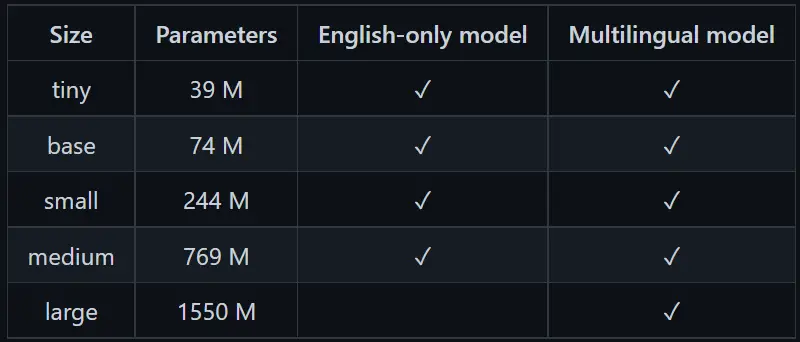

Whisper comes with multiple models. You can read more about Whisper’s models here.

By default, it uses the small model. It’s faster but not as accurate as a larger model. For example, let’s use the medium model.

We can do this by running the command:

!whisper AUDIO_FILE --model medium

In my case:

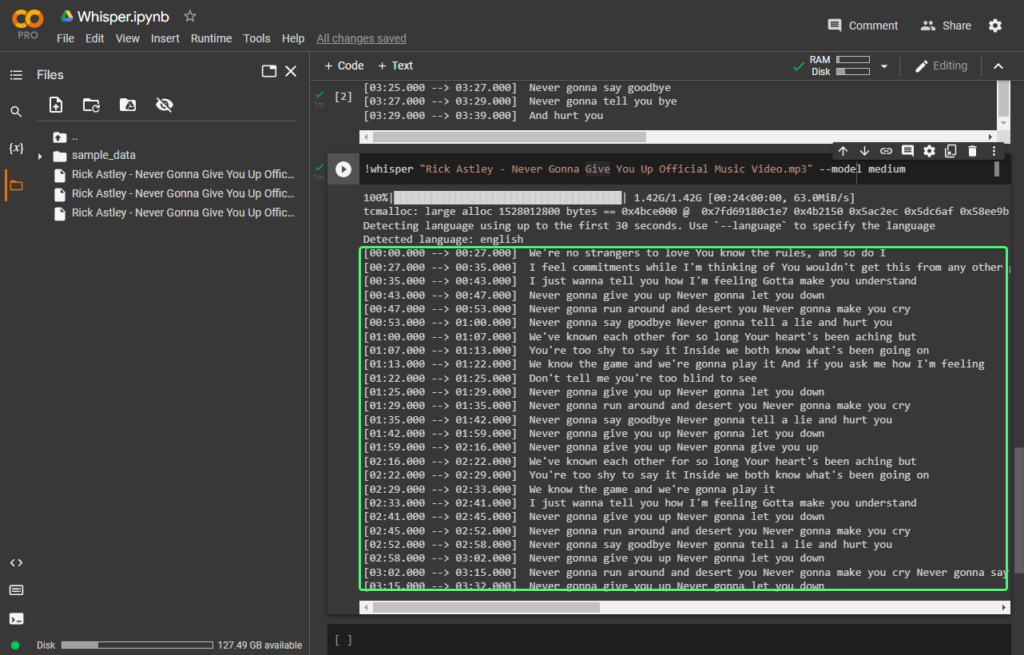

!whisper "Rick Astley - Never Gonna Give You Up Official Music Video.mp3" --model medium

The result is more accurate when using the medium model than the small one.
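If you want to try several models or files in a row, the same command can be assembled from Python. This is just a sketch: `build_whisper_command` and `run_whisper` are made-up helper names, and the `--task` option (which is not used elsewhere in this tutorial) is included only because Whisper can also translate into English.

```python
import subprocess


def build_whisper_command(audio_file: str, model: str = "", task: str = "") -> list:
    """Build the argument list for the `whisper` command-line tool."""
    command = ["whisper", audio_file]
    if model:
        command += ["--model", model]  # for example "medium"
    if task:
        command += ["--task", task]  # "transcribe" or "translate"
    return command


def run_whisper(audio_file: str, model: str = "", task: str = "") -> int:
    """Run Whisper and return its exit code (0 means success)."""
    # Passing a list avoids quoting problems with spaces in file names.
    return subprocess.run(build_whisper_command(audio_file, model, task)).returncode


# Only prints the command; call run_whisper(...) to actually execute it.
print(build_whisper_command("my audio file.mp3", model="medium"))
```

Building the command as a list means a file name with spaces, like the one used above, doesn't need extra quotes.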

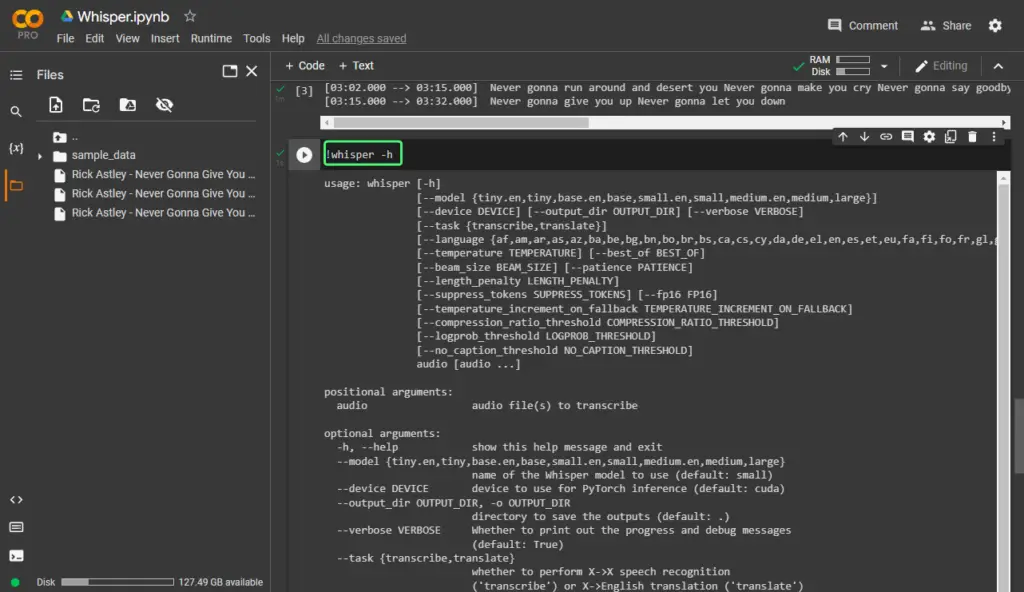

Whisper Command-Line Options

You can check out all the options available on the command line for Whisper by running !whisper -h in Google Colab.

Conclusion

In this tutorial, we covered the basic usage of Whisper by running it via the command line in Google Colab. This tutorial was just meant to get us started and see how OpenAI’s Whisper performs.

You can easily use Whisper from the command line or in Python, as you’ve probably seen from the GitHub repository. We’ll most likely see some amazing apps pop up that use Whisper under the hood in the near future.
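For reference, here is roughly what the Python route looks like. It is a minimal sketch built on the `whisper.load_model` and `model.transcribe` calls from the Whisper package; the wrapper names `load_whisper_model` and `transcribe_text`, and the file name in the example, are placeholders of my own.

```python
def load_whisper_model(name: str = "small"):
    """Load a Whisper model by name (downloads it on first use)."""
    # Imported here so the helpers can be defined before Whisper is installed.
    import whisper

    return whisper.load_model(name)


def transcribe_text(model, audio_path: str) -> str:
    """Transcribe an audio file and return only the recognized text."""
    # transcribe() returns a dict; the full transcript is under the "text" key.
    result = model.transcribe(audio_path)
    return result["text"].strip()


# Example (run after the install step; the file name is a placeholder):
# model = load_whisper_model("small")
# print(transcribe_text(model, "my_audio.mp3"))
```

Loading the model once and reusing it is handy when you have several files, since the model doesn't have to be loaded again for each one.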

Useful Resources & Acknowledgements

- The GitHub Repository for Whisper – https://github.com/openai/whisper. It has useful information on Whisper, as well as some nice examples of using Whisper from the command-line, or in Python.

- OpenAI Whisper – MultiLingual AI Speech Recognition Live App Tutorial – https://www.youtube.com/watch?v=ywIyc8l1K1Q. A very useful intro to Whisper, as well as a great demo on how to use it with a simple Web UI using Gradio.

- Hacker News Thread – https://news.ycombinator.com/item?id=32927360. You can find some great insights in the comments.

worked great – THANK YOU !!

I’m using this to transcribe voice audio files from clients… super helpful.

Hi! Thanks for commenting! Glad to help! I’m happy you found it useful!

Great tip to use it on Colab instead of locally. WAY faster.

Hi! Thank you! Glad to be of service!

What’s the best way to use it for long transcriptions? Say 1-2 hours?

I’m using it to do 2-3 hour files and its working great.

Thank you!! Very helpful for my 8-mins talk.

Hi! Glad to help! Also thanks for the feedback. It is very much appreciated!

I tried several files and they kept erroring out and follow this to a t.

channel element 0.0 is not allocated

Does not work errors everywhere dvck all geeks.

Hi, Ally. Thanks for commenting. Would you mind sharing a screenshot with the errors you’re getting? I just tried it now to make sure there haven’t been any updates on their end to cause errors.

Don’t know what she’s talking about. Still works great. This is fantastic.

Nice! Thanks for commenting! I’m happy to hear!

what is the progress bar indicating?

when I use it on linux machine I get “FP16 is not supported on cpu using FP32 instead” what does this mean? is there a way to speed up the transcription? Thank you.

Hi, thanks for commenting. I believe it needs a GPU to speed up the transcription, and because it wasn’t able to use one it used your CPU, which is slower.

The message “FP16 is not supported on CPU, using FP32 instead” means that the hardware isn’t capable of performing the quicker, but less precise, FP16 computations, so it’s defaulting to the slower, but more precise, FP32 calculations. This could slow down the transcription.

For it to be faster you’d need a good Nvidia GPU with the CUDA toolkit installed, which is software that allows you to use your GPU for this type of task.

Hope that helps. Let me know if there’s anything I can help with. Thank you!
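To make the FP16 vs FP32 difference a bit more concrete, here is a tiny illustration using only Python's standard library. It has nothing to do with Whisper's internals; it simply stores the same number as a 16-bit and a 32-bit float and shows how much precision each one keeps. The helper name `round_trip` is made up.

```python
import struct


def round_trip(value: float, fmt: str) -> float:
    """Store `value` in the given struct format and read it back."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


value = 0.1
# "<e" is a 16-bit (half precision) float, "<f" is a 32-bit (single precision) float.
for label, fmt in (("FP16", "<e"), ("FP32", "<f")):
    stored = round_trip(value, fmt)
    error = abs(stored - value)
    print(f"{label}: {struct.calcsize(fmt)} bytes, stored as {stored!r}, error {error:.2e}")
```

FP16 takes half the memory per number and loses some precision, which is the trade-off behind that message.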

Omg, this way faster then doing it locally. It helped me a lot, and it’s very easy to use. Thank you very much!!!

Hi, Mustafa. Glad it helped and thanks for commenting and letting us know! It’s very much appreciated!

I have material in m3u8 format. How can I make transcriptions? This guide doesn’t work

Hi Parker. I believe m3u8 files are files that only contain the location of the actual videos. So they’re like files containing a playlist. I’m not sure where you got it from, but if it’s from a website where there was an embedded video you can use https://www.downloadhelper.net/ to reliably download the actual videos.

Hope this helps. I’m aware it may be confusing. I was confused the first time when dealing with m3u8 files.

This comes from the Chrome extension Twitch vod downloader. Sample m3u8: https://dgeft87wbj63p.cloudfront.net/668f26476ac0d6233d08_demonzz1_40330491285_1703955163/chunked/index-dvr.m3u8

I am looking for a program that will quickly transcribe broadcast recordings from Twitch and I will not have to download them due to the slow Internet. I am looking for a program that will transcribe 4 hours of vod up to a maximum of 30 minutes. Do you have any idea?

I haven’t tried it out but I’m thinking this might work https://github.com/collabora/WhisperLive

It seems it also comes with a Chrome or Firefox extension which captures the audio from your current tab and transcribes it.

It looks pretty cool.

I don’t know if I’ll be able to try it out myself anytime soon, however, due to other work I am involved in at the moment.

I hope it helps.

Hi. This tutorial always worked perfect for me since I found it and recommended it to everyone. I am a journalist and in my profession it is a wonderful thing. But since some time ago there was an update that makes a lot of nVidia dependencies to load, and the two minutes that Whisper took before to be ready went to almost twenty…

Cheers!

Hearing this made my day. I’m really happy it helps! Thanks so much for the kind words, Marcelo. I very much appreciate it!

Thanks to you! In fact, I adapted what you wrote and made a tutorial in Spanish for my co-workers.