In this article, we will review and rank the services of multiple cloud GPU platforms based on their capability, ease of use, pricing, and other notable attributes, so you can compare them in one place.

Introduction

Whether you are a data scientist or a machine learning enthusiast, you need capable, fast hardware, because your tasks demand enormous amounts of processing at an acceptable speed.

However, you may be unsure how to proceed: perhaps you don’t need a GPU long-term, the budget just isn’t there, or you simply don’t want the hassle of installing new hardware.

So you turn to cloud GPU platforms: cheap, easy, and highly effective options for your virtual GPU needs. You may have heard of these services, yet still be unsure where to begin and which one deserves your time and money.

In this article, we’ll simplify the process for you by reviewing and summarizing what each cloud GPU service has to offer so that you can make an informed decision.

What Is the Best Cloud GPU for Deep Learning? Overall Recommendations.

Special Mentions

- Google Colab – A free, popular platform with a powerful API, perfect for on-the-go work.

- Kaggle – Free, community-driven, resource-rich platform for all data scientists offering free GPUs.

Best Cloud GPU Providers

- Paperspace – Free GPU tier, scalable, and perfect for beginners and teams. No servers in Asia.

- Google Cloud Platform – Extremely robust platform with a high barrier to entry. High performance and global servers.

- AWS EC2 – One of the most popular, top-of-the-line, robust platforms available, with a high barrier to entry.

- Azure (Azure N series) – A top competitor among industry-level cloud GPU platforms; Azure has a high barrier to entry but is extremely flexible and capable.

- Coreweave – US-only servers, but top-tier performance, variety, and convenience.

- Linode – Cloud GPU platform perfect for developers.

- Tencent Cloud – If you need a server located in Asia (or globally) for an affordable price, Tencent is the way to go.

- Lambda Labs – Specifically oriented towards data science and ML, this platform is perfect for those involved in professional data handling.

- Genesis Cloud – Renewable energy based, relatively cheap yet quite capable platform with servers located mainly in Europe.

- OVH Cloud – IaaS offered by the biggest cloud hosting provider in Europe. Highly reliable and secure.

- Vultr – vGPUs offered at an affordable price with global infrastructure.

- JarvisLabs – Oriented towards beginners and students, yet scalable with demand. Servers are located only in India.

- Vast AI – vGPUs offered at an affordable rate. Vast.ai’s hosting system is innovative because it relies on community hosts rather than fixed data centers.

- Runpod.io – Extremely affordable rates and wide variety offered for cloud GPUs. Servers are hosted both by the community and by the fixed data centers offered by Runpod.

- Banana.dev – ML-focused, small-scale startup offering serverless hosting. Easily integrated with popular ML models such as Stable Diffusion.

- Fluidstack – The Airbnb of GPU computation.

- IBM Cloud – Industry grade IT platform that offers secure, robust GPU compute with integrated global network.

- Brev – If you are a software engineer looking for a customized environment with a simple interface tailored to your task, or a data scientist looking for instances with model integration, Brev is a strong choice.

What Is a GPU, and Why Do I Need One?

GPUs (Graphics Processing Units), similar to yet distinctly different from CPUs, can handle massive amounts of data processing thanks to parallel processing and higher memory bandwidth.

Rendering graphics, especially in games (for example, shading and real-time 3D imaging), requires large amounts of real-time processing to stay smooth.

Additionally, this capability is also used in cryptocurrency mining due to the similar nature of the workloads.

CPUs can handle most processing tasks, but they struggle with large volumes of similar tasks, which GPUs handle well because they can process the data simultaneously.

Similarly, GPUs handle deep learning efficiently because of the massive amount of computation required during training. Training involves matrix operations on weights and tensors across the many layers of a large network, and these operations run far faster on a GPU.
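To see why this workload suits a GPU, consider a single dense layer. Multiplying an m×k input matrix by a k×n weight matrix takes roughly 2·m·k·n floating-point operations (one multiply and one add per term), and every output element can be computed independently of the others. The minimal pure-Python sketch below illustrates both points; the helper names are our own, and a real framework would hand these independent dot products to thousands of GPU cores at once instead of looping over them one by one.

```python
def matmul_flops(m: int, k: int, n: int) -> int:
    """Approximate floating-point operations for an (m x k) @ (k x n) product.

    Each of the m*n outputs is a dot product of length k: k multiplies and
    k adds, hence roughly 2*m*k*n operations in total.
    """
    return 2 * m * k * n


def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Naive matrix multiply. Each output cell depends only on one row of `a`
    and one column of `b`, so all cells could be computed in parallel."""
    rows, inner, cols = len(a), len(b), len(b[0])
    assert all(len(row) == inner for row in a), "inner dimensions must match"
    return [
        [sum(a[i][p] * b[p][j] for p in range(inner)) for j in range(cols)]
        for i in range(rows)
    ]


# A batch of 1,024 inputs through one 1,024 x 1,024 layer (forward pass only):
print(matmul_flops(1024, 1024, 1024))  # 2147483648, about 2.1 billion operations
print(matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
```

Training repeats this for every layer, in both the forward and backward passes, over many batches, which is why the operation count quickly outgrows what a CPU handles comfortably.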

So Why a Cloud GPU Platform?

If you’re using a laptop or desktop computer, it already has a CPU built in, since the CPU is the core of the device.

Many modern computers and laptops also come with a built-in GPU, but you may often need a more powerful GPU than the one you own to handle heavier deep learning tasks.

When you depend only on your computer’s GPU for deep learning, you may run into some of the following situations:

- Limited GPU Power: Your computer’s GPU may not be as powerful as a cloud GPU, which may be a problem if you need to perform deep learning tasks that require more resources, such as when you’re training larger deep learning models.

- Heat & Noise: GPUs can generate a lot of heat and noise, which can be a problem if you work in a small space, share your workspace with other people, or are sensitive to noise.

- Cost: Upgrading or replacing a computer’s GPU can get expensive, especially if you need the latest generation GPUs.

- Compatibility: Depending on your computer’s age and its other components, the latest and greatest GPU may not be compatible with it, so you may also need to upgrade the rest of your computer, which adds further to the cost.

- Upgrade Cycle: If you depend on your computer’s GPU, you may need to upgrade it every few years as better GPUs are released. This can be inconvenient and costly, although how often you need to upgrade depends on the deep learning tasks you work with.

- Limited Availability: You only have access to the GPUs in your computer (whether one or multiple). If you use your GPU for tasks that require a lot of processing power, there’s the possibility that it won’t always be available when you need it. For example, if you use your computer for multiple tasks at the same time, it’s possible that your GPU won’t have enough resources to handle all of them.

In contrast, cloud GPU instances (mostly NVIDIA-based) offered by cloud GPU services remove most of this hassle and, for on-demand use, can come at a much lower price.

- Increased GPU power: Cloud GPUs are usually more powerful than your computer’s GPU, which is very useful if you need to perform deep learning tasks that require a lot of resources, such as training large deep learning models.

- No worrying about heat or noise: Cloud GPUs are typically located in remote data centers, which are managed by professional tech teams who ensure the best conditions are met for the GPUs to function properly 24/7, so you don’t need to worry about heat or noise.

- Cost Efficiency: Renting a powerful cloud GPU can be much more cost-efficient than buying because renting a cloud GPU allows you to pay only for the time you use it while buying a powerful GPU for your computer requires a large upfront cost. Say you only need a powerful GPU for a few hours a week; renting a cloud GPU would be much more cost-efficient than buying a powerful GPU for your computer.
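A quick break-even calculation makes this concrete. The sketch below assumes a hypothetical $1,600 purchase price for a local GPU and uses the $0.51/hr Paperspace P4000 rate listed later in this article; it ignores electricity, resale value, and storage costs, so treat the result as a rough guide rather than a verdict.

```python
def break_even_hours(purchase_price: float, hourly_rate: float) -> float:
    """Hours of cloud rental that would cost the same as buying the GPU outright."""
    if hourly_rate <= 0:
        raise ValueError("hourly_rate must be positive")
    return purchase_price / hourly_rate


def weeks_to_break_even(purchase_price: float, hourly_rate: float,
                        hours_per_week: float) -> float:
    """Weeks of steady weekly usage before buying would have been cheaper."""
    return break_even_hours(purchase_price, hourly_rate) / hours_per_week


# Hypothetical numbers: a $1,600 card vs. renting at $0.51/hr.
hours = break_even_hours(1600, 0.51)
weeks = weeks_to_break_even(1600, 0.51, hours_per_week=5)
print(f"{hours:.0f} hours, or about {weeks / 52:.1f} years at 5 hours/week")
# prints: 3137 hours, or about 12.1 years at 5 hours/week
```

At a few hours a week, renting stays cheaper for many years; heavy daily use shortens the break-even point considerably.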

- Compatibility: Cloud GPUs are usually updated and compatible with the latest software and hardware, so you won’t need to worry about compatibility issues.

- Flexibility: With a cloud GPU provider, you can easily scale up or down, depending on your requirements, which can be very useful if you have fluctuating workloads.

- Availability: Cloud GPUs are usually available on demand, and cloud GPU platforms let you choose from multiple GPU models.

- Ease of Use: Cloud GPU providers usually offer an easy-to-use interface with preconfigured environments, which can save you time and effort.

There are various benefits to using cloud GPU instances. Multiple services offer such instances, and choosing one depends on the individual or organization’s needs. For this reason, it is important to research the options and determine which one is the best fit.

In this article, we will discuss multiple cloud GPU providers so that you can determine which one is the best fit for you.

Special Mentions

If you are a beginner or a data science enthusiast and don’t have (or don’t want to spend) a budget on cloud GPUs for what is essentially a temporary exploration of deep learning, you are in luck: Google provides Kaggle Notebooks and Google Colab notebooks, both virtual, lightweight JupyterLab-style notebooks that can run on a free GPU (including the Tesla P100) for a limited time.

Because the notebooks come with pre-installed data science libraries such as scikit-learn and PyTorch, no setup is required, which makes these platforms perfect for exploratory, lightweight, on-the-go data science work.

1. Kaggle (highly recommended for those who want a quick start and beginners)

- Free GPU/TPU (Tesla P100)

- Perfect for beginners

- Supports R

- No setup is required at all

- Lightweight

- Quotas for GPU usage

- Entire courses, competitions, community

- Integrated JupyterLab notebooks

- Simple and easy to understand

- Can be utilized whenever, wherever

Kaggle is one of the largest platforms for public datasets, data science and machine learning competitions, and community discussion. It has become an integral part of many data scientists’ learning and careers thanks to its ML/DL community, massive collection of datasets, competitions, and free, open-source projects and notebooks.

Why is Kaggle special?

This beautiful gift to data science was founded by Anthony Goldbloom in 2010 and acquired by Google in 2017.

The website offers a substantial amount of free “knowledge,” ranging from 50,000 public datasets to 450,000 public notebooks, figures that have likely grown considerably by 2023.

If you dabble in deep learning, you have probably heard of Jeremy Howard, the co-founder of fast.ai, a deep learning library that is widely praised for its efficiency and simplicity.

Jeremy Howard was also formerly Kaggle’s chief scientist.

One of Kaggle’s best features is its scoring system, which quantifies your progress as a data scientist, especially if you’re just starting out.

Additionally, besides its countless free resources, Kaggle hosts public competitions whose rewards range from Kaggle ranking points to cash prizes worth thousands of dollars.

For a beginner deep learning enthusiast, Kaggle’s reinforcement learning, neural network, and machine learning courses are invaluable thanks to their simplicity and clarity.

And the scoring system helps you track progress with real, observable numbers.

On the GPU side, Kaggle offers free notebook IDEs that can be attached to a GPU at no cost.

You have free rein to use the GPU for up to 30 hours per week. The GPU itself is an NVIDIA Tesla P100 with 16GB of memory.

The service is entirely free with no options for payment.

“I want to use Kaggle’s notebooks then, where do I begin?”

1. Go to Kaggle.com

2. If you have an account, log in; if not, register a new account for free.

3. Once you’ve landed on the homepage, click on the “Create” button and select from the drop-down list “New Notebook”.

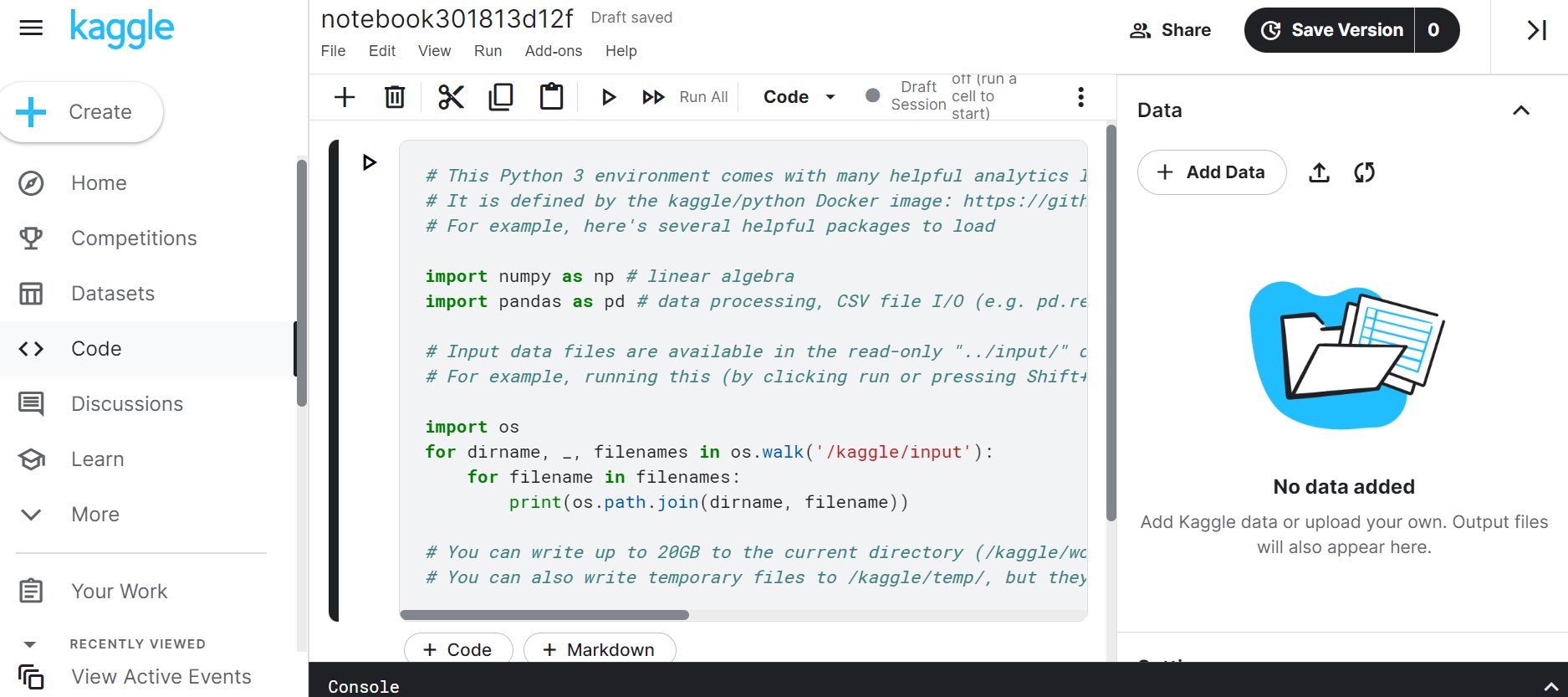

4. You will land on the newly created notebook which is a Jupyter environment, the default language being Python. You can change the language to R, optionally.

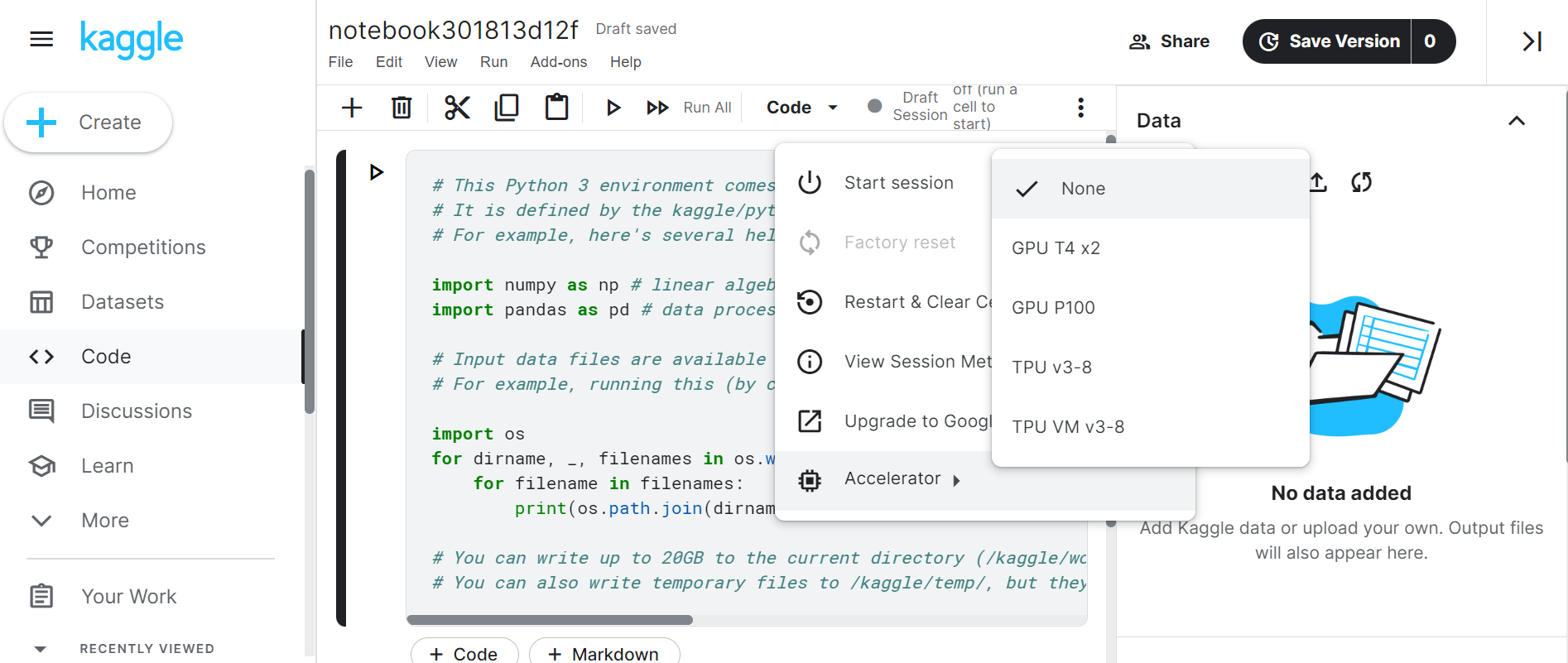

5. Click the vertical ellipsis (⋮), navigate to the “Accelerator” tab, and open the list of available accelerators.

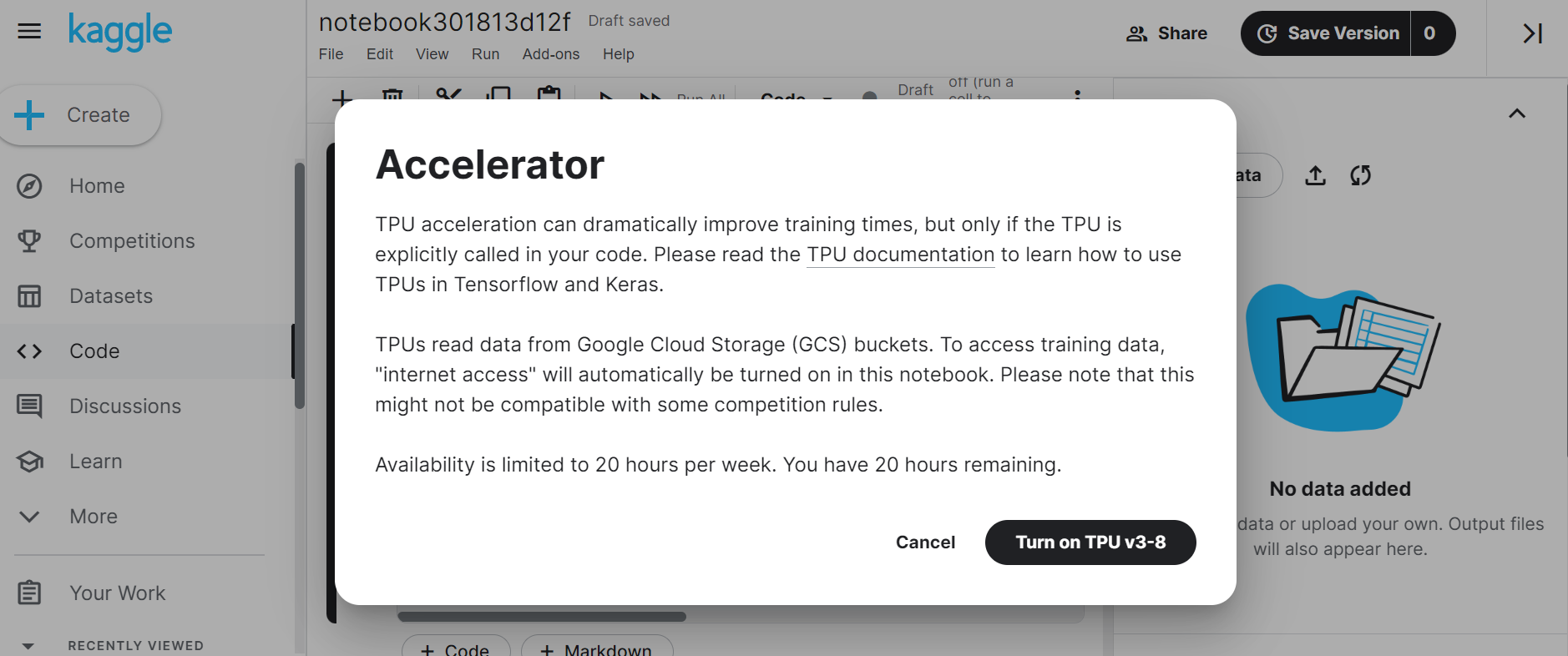

6. Choose whichever one you prefer and you will be shown the use cases as well as remaining hours you have left for the week using the GPU.

And there you have it. Your Kaggle notebook now runs on a 16GB NVIDIA Tesla P100 GPU, which can handle “most” of your personal work. Unless your workload is at an industrial scale, this GPU is usually enough for learning and personal projects.
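If you would rather confirm the attached GPU from Python than from the settings panel, a small helper can call nvidia-smi, which ships with the NVIDIA driver on GPU-enabled notebooks. This is a minimal sketch: the helper names are our own, and the exact GPU name string reported depends on the driver version.

```python
import shutil
import subprocess


def parse_gpu_csv(output: str) -> list[tuple[str, str]]:
    """Turn `nvidia-smi --query-gpu=name,memory.total --format=csv,noheader`
    output into (name, total_memory) pairs, one per GPU."""
    gpus = []
    for line in output.strip().splitlines():
        name, memory = (field.strip() for field in line.split(",", 1))
        gpus.append((name, memory))
    return gpus


def list_gpus() -> list[tuple[str, str]]:
    """Return the GPUs visible to this notebook, or an empty list if
    nvidia-smi is not installed (i.e. no accelerator is attached)."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader"],
        capture_output=True, text=True, check=True,
    )
    return parse_gpu_csv(result.stdout)


print(list_gpus() or "No GPU attached; check the Accelerator setting.")
```

On a P100 session, you would expect a single entry whose memory field reads roughly 16GB in MiB.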

2. Google Colab (highly recommended for jump starting)

- Widely known, lightweight JupyterLab with pre-installed data science related libraries

- Free GPU and TPU

- No setup required

- Supports Swift

- Integrated with Google Drive

- Powerful API

- Clean and simple user interface

- NVIDIA T4 GPUs on the free tier, with faster GPUs such as the NVIDIA V100 and A100 on paid plans

- GPU usage limit

Google Colab is a widely known web-based IDE for data scientists who want a quick data processing environment with no setup and all the tools of a standard JupyterLab. Since it is a Google product, the interface is integrated with Google Drive.

Given how central Google’s TensorFlow has become to deep learning, it is a safe bet that Google’s data science and AI products are just as good, if not better, in terms of usability and convenience.

Google Colab is the ultimate “pocket” Jupyter on the web, as it offers essentially everything a notebook can, and more, at no cost.

For beginners and anyone who wants a jumpstart, this service is perfect: all the libraries you need for simple data science tasks are pre-installed, and you can work with PyTorch, Keras, OpenCV, TensorFlow, and more.

“Alright, I like the sound of that. How do I get a GPU there?”

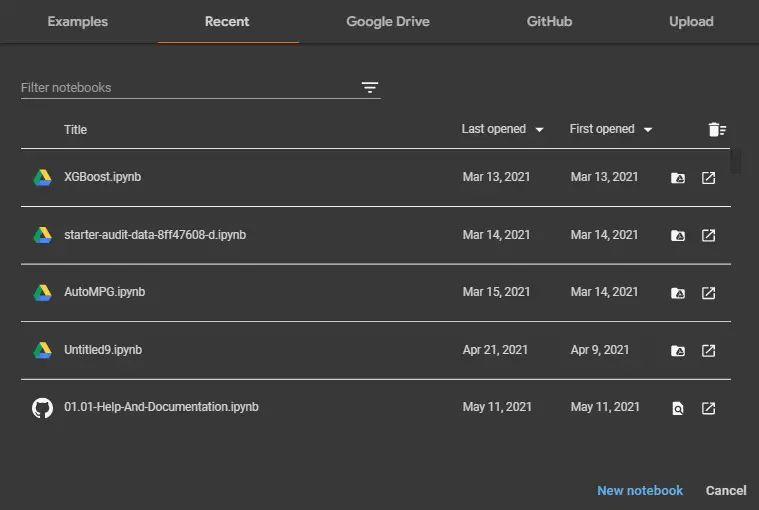

1. Go to Google Colab

2. Register or proceed with pre-existing account

3. Click on ‘New notebook’

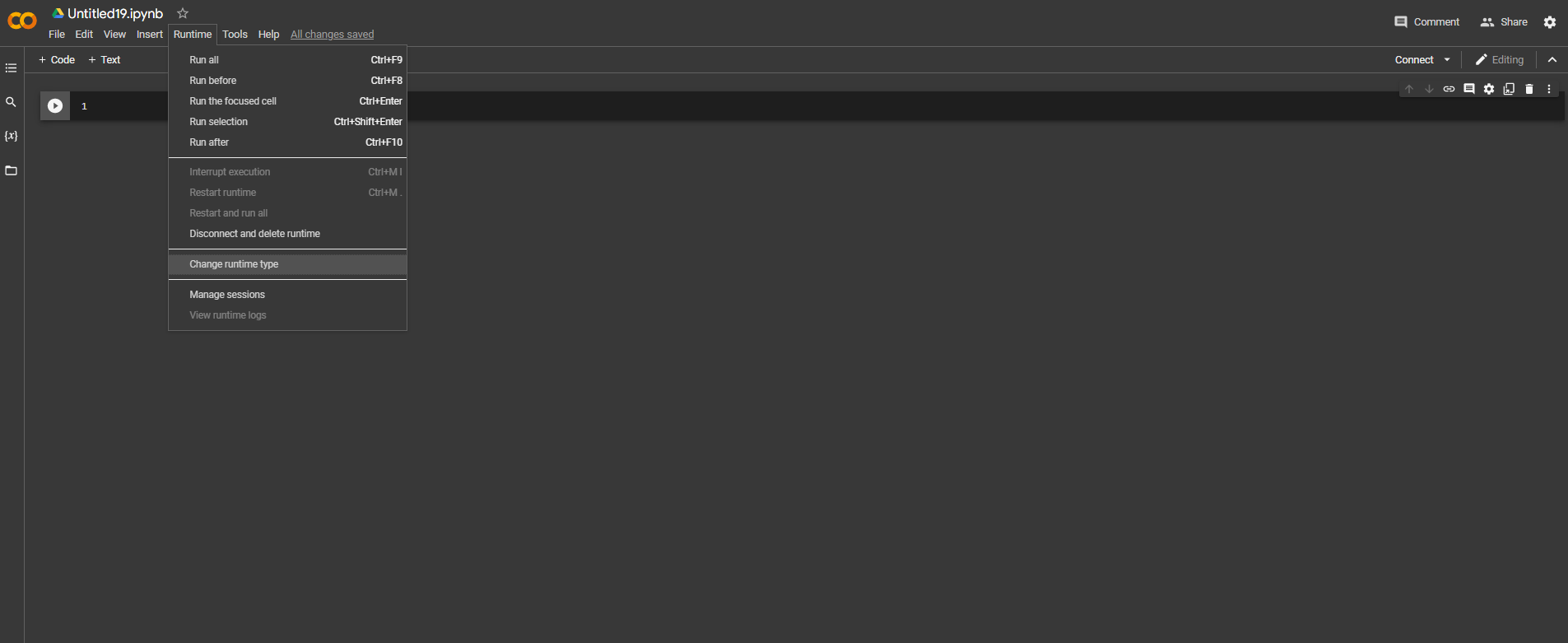

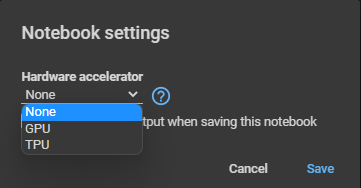

4. Once the notebook is created, click ‘Runtime’ in the toolbar and select ‘Change runtime type’ from the drop-down list.

5. Pick either a GPU or a TPU, and Colab will assign you an accelerator from whatever resources are currently available.

And there you have it. On the free tier, you can use the GPU or TPU for up to 12 hours, after which you have to wait 12 hours before continuing.

To check which GPU model you got, run the following command in a notebook cell (Colab passes lines that start with ! to the shell):

```
!nvidia-smi
```

And find out whether you got lucky!

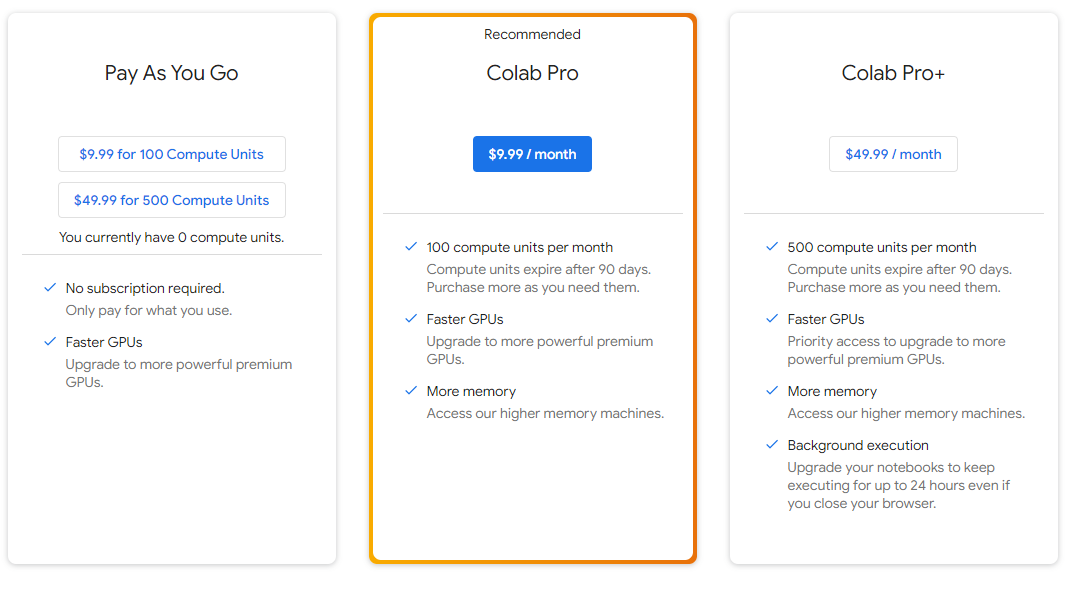

Google also offers paid plans. These include a pay-as-you-go option; Colab Pro, which grants faster GPU models and compute units to spend on more powerful VMs; and Colab Pro+, which adds background execution, meaning your notebook keeps running even after you close the browser tab.

Full Comparison

| | Kaggle Kernels | Google Colab |
|---|---|---|
| Owned by Google? | Yes | Yes |
| Free Tier | Yes | Yes |
| Free Tier GPU | 1 x NVIDIA Tesla P100 | 1 x Nvidia K80s, T4s, P4s and P100s |
| Free Tier Session Limitations | 9 hours (restarts after 60 mins) | 12 hours (restarts after 90 mins) |
| Language Support | Python, R | Python, Swift |
| Download Notebooks? | No | No |
| Cost | Free | Free or $9.99/mo |
| JupyterLab or IDE | IDE only | IDE only |
| API Access? | No | Yes |
| Link sharing? | Yes | Yes |

Best Cloud GPUs for Deep Learning

1. Paperspace

Key Features

- Gradient Notebooks: These are a more sophisticated and enhanced version of Jupyter Notebooks.

- Highly Flexible Environment: Allows you to customize your setup and workloads to best suit your needs.

- Highly Flexible Pricing: Provides a range of options to fit any budget.

- Free GPUs Offered with Quotas: A range of powerful GPUs available for free, on a limited basis.

- Perfect for Teams, Beginners and Mid-Level Projects: Gives users of all kinds the ability to develop and explore new ideas.

- Robust Features: Provides users with access to powerful and reliable tools and capabilities.

- Simple Interface: This makes it easy for users to quickly and efficiently access their resources.

- AI-Focused: Enables users to implement the latest AI technologies and workflows.

- Intuitive Guides and Documentation: This makes it easier for users to get up and running quickly.

- Essentially Every GPU Type Available: Gives users access to the latest hardware and technologies to help their work.

Developed by Paperspace, CORE is a fully managed cloud GPU platform that provides straightforward, cost-effective, and accelerated computing for a variety of applications.

Paperspace CORE stands out for its user-friendly admin interface, powerful API, and desktop access for Linux and Windows systems. It also offers strong collaboration features and computing power that scales to even the most demanding deep learning tasks.

Its lineup includes a wide range of cost-effective, high-performance NVIDIA GPUs attached to virtual machines, all preloaded with machine learning frameworks for fast and easy computation.

Paperspace also provides Gradient Notebooks.

The Paperspace Gradient Platform

With the help of robust Paperspace machines, Gradient Notebooks offers a web-based Jupyter interactive development environment. The Gradient platform is an MLOps platform that offers a seamless path to scale into production, a greater selection of free and commercial GPU/IPU workstations, and a better startup experience as compared to competing cloud-based notebooks. Gradient Notebooks with free GPUs are beginners’ go-to for simple, experimental projects.

GPU Locations

Paperspace is mainly situated in North America and Europe. More on their locations here.

| Region Name | Country | Location |
|---|---|---|
| NY2 | United States | Near New York City |
| CA1 | United States | Near Santa Clara |
| AMS1 | The Netherlands | Near Amsterdam |

GPU Types and Pricing

Paperspace boasts a plethora of GPU models with flexible pricing as shown below:

| Name | GPU Memory (GB) | RAM (GB) | Price (hourly) | Price (monthly) | Regions |
|---|---|---|---|---|---|
| GPU+ (M4000) | 8 | 30 | $0.45/hr | $269/mo | NY2 CA1 |
| P4000 | 8 | 30 | $0.51/hr | $303/mo | All |
| P5000 | 16 | 30 | $0.78/hr | $461/mo | All |
| P6000 | 24 | 30 | $1.10/hr | $647/mo | All |
| RTX4000 | 8 | 30 | $0.56/hr | $337/mo | All |
| RTX5000 | 16 | 30 | $0.82/hr | $484/mo | NY2 |
| A4000 | 16 | 45 | $0.76/hr | $488/mo | NY2 |
| A5000 | 24 | 45 | $1.38/hr | $891/mo | NY2 |
| A6000 | 48 | 45 | $1.89/hr | $1,219/mo | NY2 |
| V100 | 16 | 30 | $2.30/hr | $1,348/mo | NY2 CA1 |
| V100-32G | 32 | 30 | $2.30/hr | $1,348/mo | NY2 |
| A100 | 40 | 90 | $3.09/hr | $1,994/mo | NY2 |
| A100-80G | 80 | 90 | $3.19/hr | $2,048/mo | NY2 |

Multi-GPU Instances

Paperspace CORE provides specialized multi-GPU machines with up to 8 GPUs. Specifications and cost scale with the GPU count: an x2 variant doubles those of the base machine type, an x4 quadruples them, and so on. The available machines are listed below.

- P4000x2, P4000x4

- P5000x2, P5000x4

- P6000x2, P6000x4

- RTX4000x2, RTX4000x4

- RTX5000x2, RTX5000x4

- V100-32Gx2, V100-32Gx4

- A4000x2, A4000x4

- A5000x2, A5000x4

- A6000x2, A6000x4

- A100x2, A100x4, A100x8

- A100-80Gx2, A100-80Gx4, A100-80Gx8
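When memory is your main constraint (large models or batch sizes), a rough way to compare the single-GPU rates in the pricing table above is price per GB of GPU memory per hour. The sketch below copies a handful of rows from that table and applies the linear scaling described for multi-GPU variants; it ignores raw compute speed, so treat it as one input among several.

```python
# A few rows copied from the Paperspace pricing table: (GPU memory in GB, $/hour).
PAPERSPACE_RATES = {
    "P4000": (8, 0.51),
    "RTX5000": (16, 0.82),
    "A6000": (48, 1.89),
    "A100": (40, 3.09),
    "A100-80G": (80, 3.19),
}


def cost_per_gb_hour(name: str) -> float:
    """Hourly price divided by GPU memory: a crude value metric for memory-bound work."""
    memory_gb, hourly = PAPERSPACE_RATES[name]
    return hourly / memory_gb


def multi_gpu_hourly(name: str, count: int) -> float:
    """Multi-GPU variants (x2, x4, x8) scale the base machine's price linearly."""
    return PAPERSPACE_RATES[name][1] * count


for name in sorted(PAPERSPACE_RATES, key=cost_per_gb_hour):
    print(f"{name:<9} ${cost_per_gb_hour(name):.4f} per GB-hour")
print(f"A100-80Gx8: ${multi_gpu_hourly('A100-80G', 8):.2f}/hr")
```

By this measure, the A6000 and A100-80G give the most GPU memory per dollar, while the 40GB A100 is the most expensive per GB.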

Windows-only Virtual GPUs

These are not appropriate for graphics- or compute-intensive applications because they are designed for general-purpose desktop computing. The NVIDIA GRID “virtual” GPU, which is the GPU in this family, lacks support for CUDA, OpenCL, OpenGL, and other capabilities necessary for high-end GPU tasks.

| Name | GPU Memory (GB) | vCPUs | RAM (GB) | Price (hourly) | Price (monthly) | Regions |
|---|---|---|---|---|---|---|
| Air+ | 0.5 | 3 | 4 | $0.07/hr | $23/mo | All |
| Standard | 0.5 | 4 | 8 | $0.10/hr | $36/mo | All |
| Advanced | 2 | 6 | 16 | $0.18/hr | $66/mo | All |
| Pro | 4 | 8 | 32 | $0.32/hr | $121/mo | All |

To take a detailed look at their pricing, go to their pricing page here.

Ease of Use

Paperspace and its Gradient Notebooks product are incredibly easy to set up and use, with no pricing or initialization burdens. In fact, notebooks are offered with free GPUs, although these are limited by quotas.

Besides its simplicity, the service offers a functional user interface, tons of documentation and guides and ML oriented pre-installations.

2. Google Cloud Platform (GCP)

- Worldwide server network

- Impressive speeds

- Competitive Pricing

- Easy integration with other Google services

- Various types of GPUs

- Highly customizable

- $2.93 per hour for NVIDIA A100

- High barrier to entry

- AI Platforms and DLVMs (Deep Learning Virtual Machines)

- $300 in free credit upon account creation

If your company is looking for an infrastructure-as-a-service (IaaS) platform, you’ve probably heard of major names like Microsoft Azure and Amazon’s AWS.

Google Cloud’s GCE (Google Compute Engine) is Google’s approach to IaaS. While it’s not nearly as robust as AWS yet, Google Cloud has developed into a respectable cloud computing platform. Some notes to mention about GCP are:

- An IaaS offering that provides remote computing and cloud storage.

- Cloud storage is quite simple to utilize.

- It can be challenging to understand Google Cloud’s pricing structure, which can lead to problems later on. As a result, you might pay unforeseen costs.

- Google Cloud has one of the best security systems of any IaaS provider available today; Google even offers zero-knowledge encryption for buckets.

GCE (Google Compute Engine)

High-performance GPU servers are available through Google Compute Engine (GCE) for computing-intensive tasks.

Users of GCE can attach GPUs to both new and existing virtual machines, and Tensor Processing Units (TPUs) are available for even faster and more affordable computation.

Its primary features include per-second pricing, a user-friendly interface, and easier integration with other relevant technologies. A variety of GPU models for different cost and performance needs are also available, including NVIDIA’s V100, Tesla K80, Tesla P100, Tesla T4, Tesla P4, and A100.

The cost of GCE varies and is determined by the location and the needed computing power.

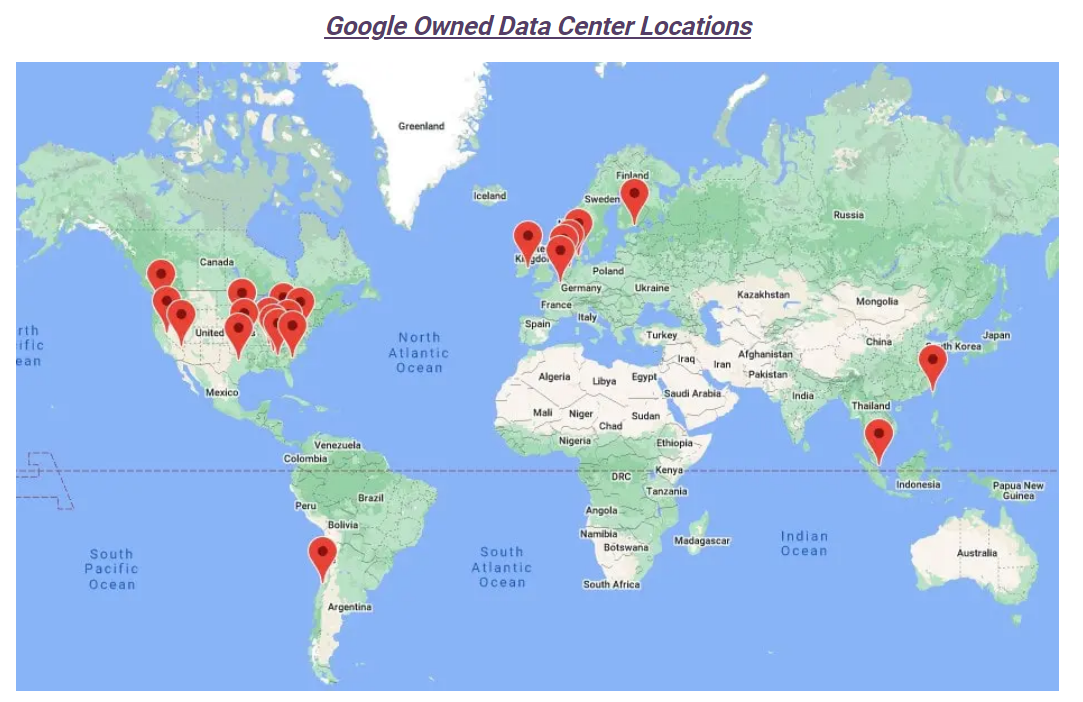

GPU Locations

Google has locations around the globe, as shown below. For further details on GPU locations, visit this link.

For further details on location, visit this link.

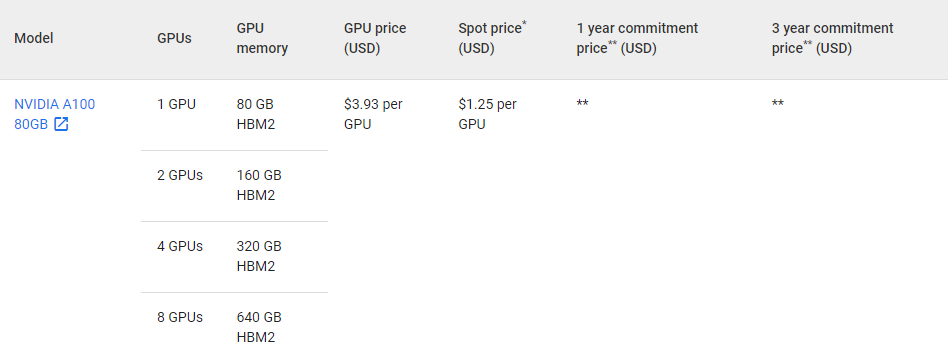

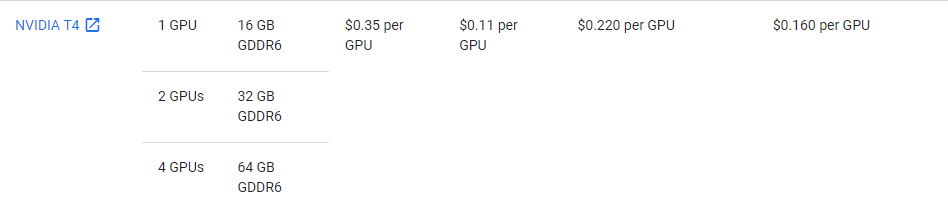

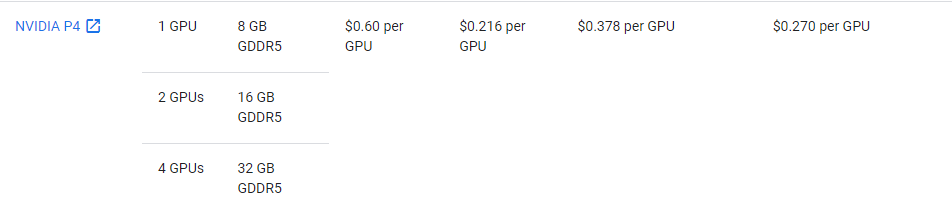

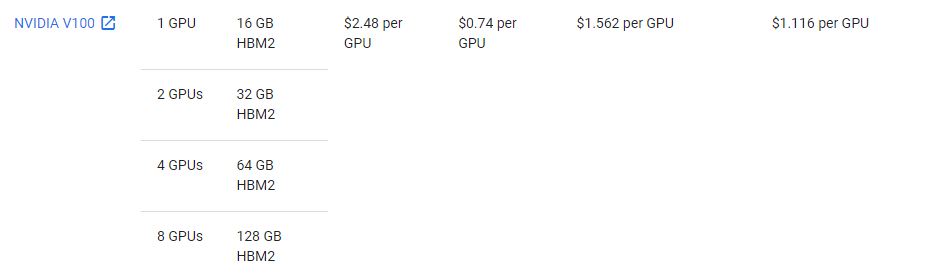

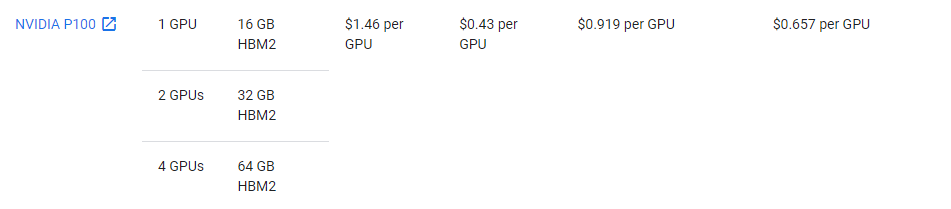

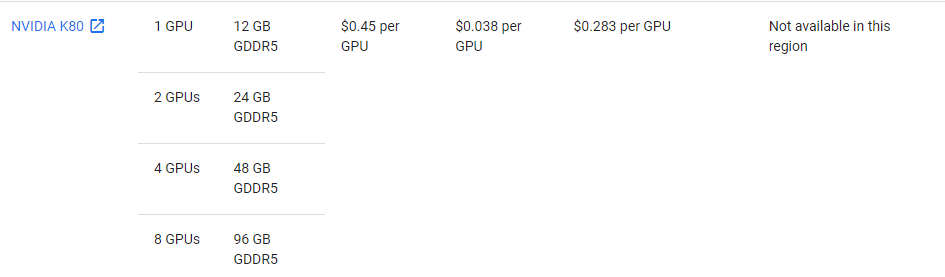

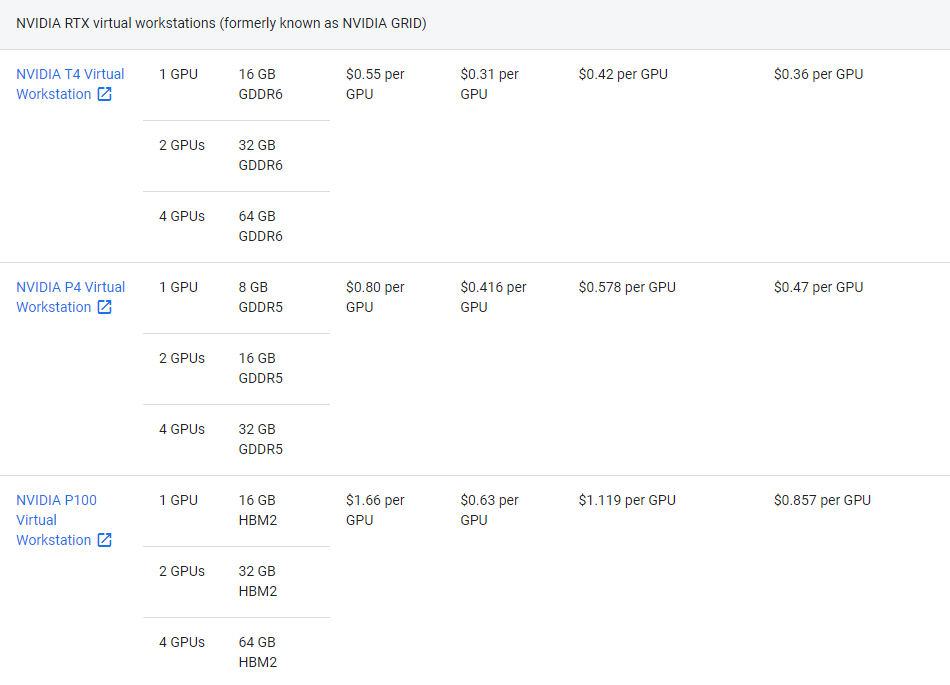

Types of GPUs and Pricing

Go here to see GPU pricing: https://cloud.google.com/compute/gpus-pricing

The prices of GPU dedicated servers vary depending on the location. For this example, we are using Iowa as the data center location to compare prices.

1. NVIDIA A100 80GB

2. NVIDIA A100 40GB

3. NVIDIA T4

4. NVIDIA P4

5. NVIDIA V100

6. NVIDIA P100

7. NVIDIA K80

8. NVIDIA RTX Virtual Workstations

9. Cloud TPUs

You can also rent dedicated Cloud TPUs; however, their pricing is quite complex and is best read and understood on the official website here.

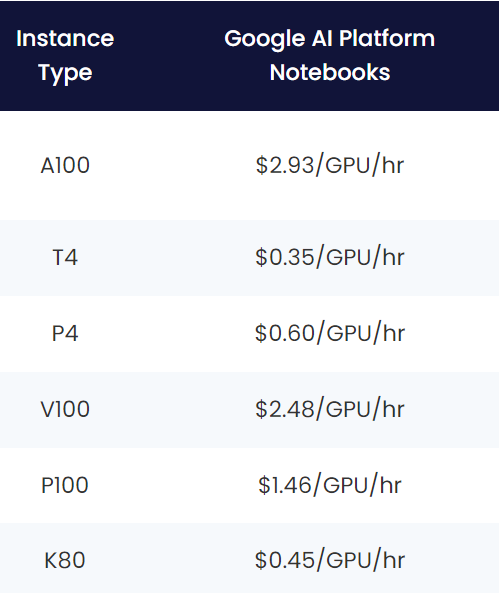

10. Google AI Platform Notebooks Pricing

Ease of Use

Setting up Google Compute Engine involves many decisions: there are numerous options to consider, locations to settle on, and GPU or TPU types to evaluate. The range of pricing and options on display makes it a daunting task for beginners.

However, their AI Platform notebooks are the best starting point:

- Create a GCP Account.

- Go to the GCP Console to enable billing.

- Add credit card and validate it.

- Go back to GCP Console, and select from the sidebar: AI > AI Platform > Notebooks

- Create an instance, apply any customizations you prefer, and run the notebook to get a fully functional JupyterLab environment on GCE.

GCP/GCE Notable Features

DLVM (Deep Learning VM Images)

These are GCP’s data science images. They come pre-installed with TensorFlow, PyTorch, scikit-learn, and more.

The fundamental building block here is the deep learning virtual machine, or DLVM. If you’ve ever used Azure or AWS, a DLVM is essentially the same as a virtual machine on those platforms.

A DLVM is comparable to your home computer. Each DLVM is assigned to a single user because DLVMs are not meant to be shared (although each user may have as many DLVMs as they wish). Additionally, each DLVM has a special ID that is used for logging in.

When it comes to computational options, DLVMs can be virtually fully customized.

A DLVM is billed for as long as it is running, whether or not you are actively using it, so it is recommended to switch off your DLVMs at the end of the day.
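Creating and stopping a DLVM can also be scripted with the gcloud CLI. The sketch below only assembles the command lines (run them with subprocess.run or paste them into a terminal). Assumptions to check before use: the instance name, zone, and machine type are placeholders (us-central1 is Google’s Iowa region, matching the pricing example in this article), and the image family is deliberately left for you to fill in, because Deep Learning VM image family names change over time.

```python
import shlex


def dlvm_create_command(name: str, zone: str, image_family: str,
                        machine_type: str = "n1-standard-8",
                        gpu_type: str = "nvidia-tesla-t4", gpu_count: int = 1) -> list[str]:
    """Build a `gcloud` command that creates a Deep Learning VM with a GPU attached."""
    return [
        "gcloud", "compute", "instances", "create", name,
        f"--zone={zone}",
        f"--machine-type={machine_type}",
        f"--accelerator=type={gpu_type},count={gpu_count}",
        f"--image-family={image_family}",
        "--image-project=deeplearning-platform-release",
        # GPU VMs generally cannot live-migrate, so they stop during host maintenance.
        "--maintenance-policy=TERMINATE",
        "--metadata=install-nvidia-driver=True",
    ]


def dlvm_stop_command(name: str, zone: str) -> list[str]:
    """Stopping the VM ends compute and GPU billing (attached disks are still billed)."""
    return ["gcloud", "compute", "instances", "stop", name, f"--zone={zone}"]


# Placeholder values; list current image families with:
#   gcloud compute images list --project=deeplearning-platform-release
create = dlvm_create_command("my-dlvm", "us-central1-a", image_family="YOUR_IMAGE_FAMILY")
print(shlex.join(create))
print(shlex.join(dlvm_stop_command("my-dlvm", "us-central1-a")))
```

Keeping the stop command next to the create command makes it harder to forget the end-of-day shutdown described above.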

Storage

What initially entices the user to Google Cloud is its limitless data storage at affordable prices. Remember to keep in mind that these prices also depend on the region.

In Iowa, monthly cloud storage costs per GB are $0.020 for Standard, $0.010 for Nearline, $0.004 for Coldline, and $0.0012 for Archive storage.
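To turn those per-GB rates into a monthly bill, multiply by the amount of data stored. This sketch uses the Iowa prices quoted above and covers storage only; it assumes nothing about retrieval, operation, or network egress charges, which Google bills separately and which matter most for the colder classes.

```python
# Monthly Cloud Storage prices per GB in Iowa, as quoted above (they vary by region).
IOWA_PRICE_PER_GB_MONTH = {
    "standard": 0.020,
    "nearline": 0.010,
    "coldline": 0.004,
    "archive": 0.0012,
}


def monthly_storage_cost(gigabytes: float, storage_class: str) -> float:
    """Storage-only cost in dollars per month for the given class."""
    return gigabytes * IOWA_PRICE_PER_GB_MONTH[storage_class]


for storage_class in IOWA_PRICE_PER_GB_MONTH:
    cost = monthly_storage_cost(500, storage_class)
    print(f"500 GB {storage_class:<8}: ${cost:.2f}/month")
```

For a 500GB dataset, that works out to $10.00, $5.00, $2.00, and $0.60 per month respectively.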

On Registration: 90-Day Free Credit ($300)

When you sign up, you get a 90-day trial with $300 in free credit that you can spend on running instances, plus 5GB of storage. When the trial ends, you need to upgrade to a paid billing account to keep using paid services.

Additional Features Worth Mentioning

- Storage — Google’s cloud-based digital storage

- BigQuery — Big data analytics

- Compute Engine — Remote computing tool and VMs

- Cloud Functions — Task automation

- App Engine — Web applications platform

- VertexAI — Google’s collective machine learning suite

- Database tools — Built-in management tools for databases

- Micro VPS instances: Virtual private servers that are simple to customize.

- Networking tools – Control the network settings of other users, including VPNs, DNS, and the sharing of data.

3. AWS EC2

- Choice of operating systems includes Linux, Windows, and macOS.

- Pay only for utilized services

- Optimized storage for workloads

- An on-demand c6g instance with 4 GiB of memory, EBS storage, and up to 10 Gbps of network performance costs $0.068 per hour

- An a1 dedicated host costs $0.449 per hour

- Jupyter integration (SageMaker)

- Free tier with only CPU compute

- Advanced networking

- Robust, global infrastructure

- Highly reputable and flexible

- Machine Learning support

- Higher than average barrier for entry and cost planning (comes with pricing calculator)

Amazon’s Elastic Compute Cloud (EC2) underpins many Amazon Web Services (AWS) offerings and was among the early services that gave rise to modern cloud computing. Amazon EC2 has grown tremendously, from two options in 2006 to a multitude today, differing in CPUs, system memory, operating systems, and auxiliary networking and storage hardware.

Amazon EC2 instances can be started or stopped as needed, which is the flexibility the word “Elastic” in the name refers to. With AWS, businesses can stop investing in hardware and pay only for the compute time they use, on an hourly or per-minute basis.

Amazon EC2 is one of the main Cloud GPU providers and is undoubtedly a secure choice, with features such as:

- Instances, or virtual computing environments

- Amazon Machine Images (AMIs), which are pre-configured templates for your instances and package the components you require for your server (including the operating system and additional software)

- The various CPU, memory, storage, and networking settings for your instances are referred to as instance types.

- Key pairs, which secure your instances’ login information (AWS stores the public key, and you store the private key in a secure place)

- Instance store volumes are storage volumes for transient data that disappears when you suspend, hibernate, or terminate your instance.

- Amazon Elastic Block Store (Amazon EBS)-based persistent storage volumes for your data, also known as Amazon EBS volumes

- Regions and Availability Zones for different physical locations tailored towards your resources, including instances and Amazon EBS volumes.

- Firewall that lets you use security groups to define the protocols, ports, and source IP ranges that can access your instances

- Elastic IP addresses: static IPv4 addresses designed for dynamic cloud computing

- You can create and apply tags, or metadata, to your Amazon EC2 resources.

- Virtual private clouds (VPCs): virtual networks you can build that are logically isolated from the rest of the AWS Cloud and that you can optionally connect to your own network
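Several of these building blocks come together in a single API call when you launch an instance. The sketch below assembles the arguments for boto3’s EC2 run_instances call: an AMI, an instance type, a key pair, a security group, and a tag. Assumptions: the AMI ID, key pair name, and security group ID are placeholders you must replace, g4dn.xlarge (one NVIDIA T4 from the G4 family) is just an example choice, and actually launching requires boto3 plus configured AWS credentials, and will incur charges.

```python
def run_instances_params(ami_id: str, key_name: str, security_group_id: str,
                         instance_type: str = "g4dn.xlarge", name: str = "dl-box") -> dict:
    """Keyword arguments for EC2 RunInstances: an AMI, an instance type,
    a key pair, a security group (firewall), and a Name tag."""
    return {
        "ImageId": ami_id,
        "InstanceType": instance_type,
        "KeyName": key_name,
        "SecurityGroupIds": [security_group_id],
        "MinCount": 1,
        "MaxCount": 1,
        "TagSpecifications": [
            {"ResourceType": "instance", "Tags": [{"Key": "Name", "Value": name}]}
        ],
    }


def launch(params: dict, region: str = "us-east-1") -> str:
    """Launch the instance and return its ID (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the helper above works without boto3 installed

    ec2 = boto3.client("ec2", region_name=region)
    response = ec2.run_instances(**params)
    return response["Instances"][0]["InstanceId"]


# Placeholder IDs: substitute a real Deep Learning AMI, key pair, and security group.
params = run_instances_params("ami-EXAMPLE", "my-key-pair", "sg-EXAMPLE")
print(params["InstanceType"], params["TagSpecifications"][0]["Tags"])
```

Separating parameter construction from the launch call lets you review (or unit-test) exactly what will be requested before anything is billed.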

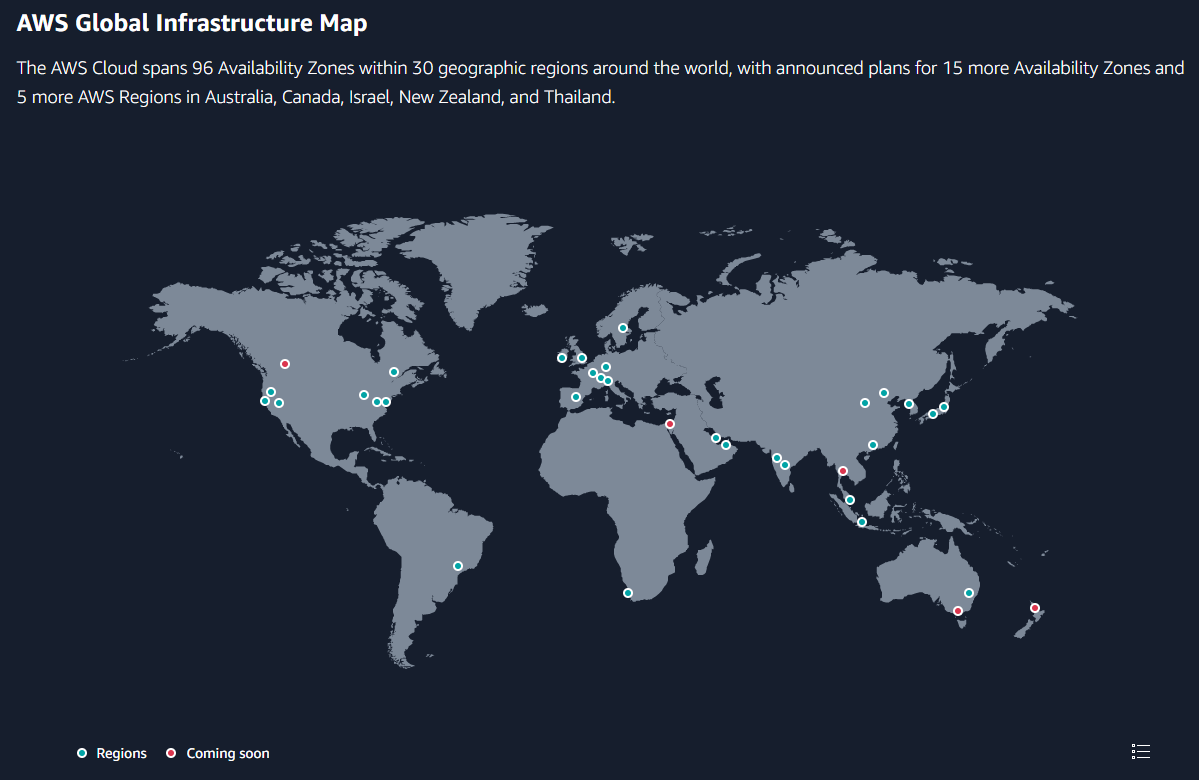

GPU Locations

AWS EC2 has a global infrastructure with 30 launched regions, 96 availability zones and 410 points of presence, including Europe, Asia, Middle East, Africa, America. Take a look at their detailed map here.

EC2 is available around the globe as shown below:

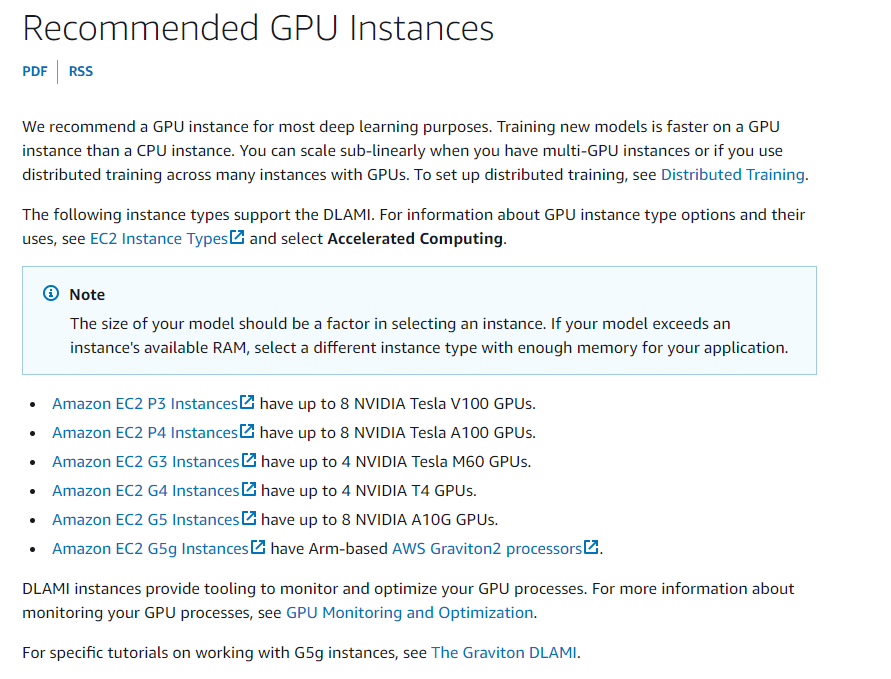

GPU Types and Pricing

For deep learning, their P and G instances are recommended due to their NVIDIA GPU integration. To see pricing yourself, click on one, and scroll down until you get to the pricing table.

- Amazon EC2 P3 Instances have up to 8 NVIDIA Tesla V100 GPUs.

- Amazon EC2 P4 Instances have up to 8 NVIDIA Tesla A100 GPUs.

- Amazon EC2 G3 Instances have up to 4 NVIDIA Tesla M60 GPUs.

- Amazon EC2 G4 Instances have up to 4 NVIDIA T4 GPUs.

- Amazon EC2 G5 Instances have up to 8 NVIDIA A10G GPUs.

- Amazon EC2 G5g Instances have Arm-based AWS Graviton2 processors

For a P3 instance, sample prices are shown below:

| GPU | GPU Count | Total GPU Memory | vCPUs | On-Demand Price |
|---|---|---|---|---|
| Tesla V100 | 1 | 16GB | 8 cores | $3.06/hr |
| Tesla V100 | 4 | 64GB | 32 cores | $12.24/hr |
| Tesla V100 | 8 | 128GB | 64 cores | $24.48/hr |
| Tesla V100 | 8 | 256GB | 96 cores | $31.218/hr |
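Dividing each row’s price by its GPU count shows how P3 pricing scales. This small sketch uses only the numbers from the table above.

```python
# (GPU count, total on-demand $/hour) for the P3 rows in the table above.
P3_ROWS = [(1, 3.06), (4, 12.24), (8, 24.48), (8, 31.218)]


def per_gpu_hour(rows: list[tuple[int, float]]) -> list[float]:
    """Divide each instance's hourly price by its GPU count."""
    return [hourly / gpus for gpus, hourly in rows]


for (gpus, hourly), unit in zip(P3_ROWS, per_gpu_hour(P3_ROWS)):
    print(f"{gpus} x V100: ${hourly:.3f}/hr total, ${unit:.3f} per GPU-hour")
```

The first three rows all work out to $3.06 per GPU-hour, so price scales linearly with GPU count. The last row costs about $3.90 per GPU-hour, reflecting its larger GPU memory (256GB across 8 GPUs) and extra vCPUs.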

Amazon offers a variety of GPUs in its P3 and G4 EC2 instances, making it one of the first major cloud providers to offer GPU cloud service. The Tesla V100 GPU, one of the most popular NVIDIA GPUs offered by many cloud providers, is available in Amazon’s P3 instances in 16 GB and 32 GB VRAM variants. There are two kinds of G4 instances: G4dn, which uses NVIDIA T4 GPUs with 16GB of VRAM, and G4ad, which uses more potent AMD Radeon Pro V520 GPUs with up to 64 vCPUs.

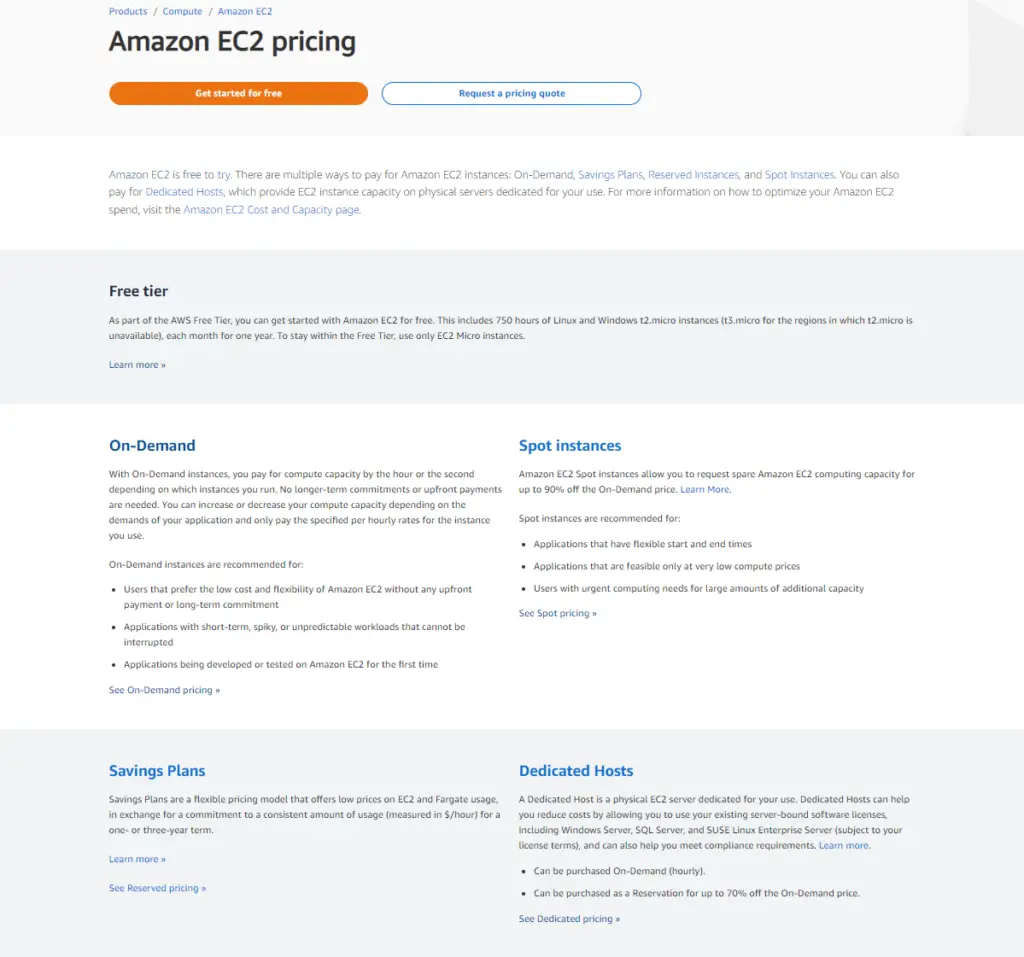

AWS EC2 pricing is rather complicated, with multiple dynamic prices for each instance and adjustments for customizations tailored to your needs. To get a general picture, note that AWS EC2 offers tiers of pricing plans.

It can get confusing when you’re trying to just find the prices for GPU instances. We’ll try to make it clearer in this section.

What are tiers?

You can find the tiers here https://aws.amazon.com/ec2/pricing/

1. Free Tier (Does NOT include GPUs)

The Free Tier plan aids in removing the cost barrier for cloud adoption. AWS Free Tier is accessible to everyone using the cloud, and they can test the features before committing long-term.

You are given 750 hours of Amazon EC2 micro instance usage per month, shared across all the regions you use. Some regions, such as Bahrain and Stockholm, do not offer t2.micro instances, so they offer t3.micro instances instead. Please keep in mind that storage isn’t included with this tier.

What are t2.micro instances?

T2 instances offer economical, general-purpose baseline CPU performance with the ability to burst when needed. With on-demand pricing, they start at $0.0058 per hour. T2 instances are generally among the lowest-cost EC2 instances and are well suited to low-latency micro-applications, small to medium databases, code repositories, and more.

2. Spot Pricing

After the Free Tier, the next most affordable option is Spot pricing.

Spot Instances let you use spare EC2 capacity that would otherwise sit idle, which is why spot prices are heavily discounted. They are well suited to short-term tasks such as testing and development.

When Amazon EC2 needs the capacity back, it can interrupt your Spot Instances, though you are warned two minutes beforehand. This means that applications running on Spot Instances need to be fault-tolerant.
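Fault tolerance starts with noticing the warning. On EC2, a pending Spot interruption is exposed through the instance metadata service at the `spot/instance-action` path, which returns a small JSON document once an interruption is scheduled and HTTP 404 otherwise. The Python sketch below assumes IMDSv2 (session tokens) is enabled on the instance; it fetches that document and works out how many seconds remain. What your job does with those seconds, such as saving a checkpoint, is up to you.

```python
import json
import urllib.error
import urllib.request
from datetime import datetime, timezone
from typing import Optional

IMDS_BASE = "http://169.254.169.254/latest"


def parse_instance_action(body: str, now: datetime) -> float:
    """Seconds left before the interruption described by a spot/instance-action document."""
    notice = json.loads(body)  # e.g. {"action": "terminate", "time": "2023-01-12T10:02:00Z"}
    when = datetime.strptime(notice["time"], "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)
    return (when - now).total_seconds()


def fetch_instance_action(timeout: float = 1.0) -> Optional[str]:
    """Return the pending interruption notice via IMDSv2, or None if none is scheduled.

    Only works from inside an EC2 instance.
    """
    token_request = urllib.request.Request(
        f"{IMDS_BASE}/api/token",
        method="PUT",
        headers={"X-aws-ec2-metadata-token-ttl-seconds": "60"},
    )
    with urllib.request.urlopen(token_request, timeout=timeout) as resp:
        token = resp.read().decode()
    notice_request = urllib.request.Request(
        f"{IMDS_BASE}/meta-data/spot/instance-action",
        headers={"X-aws-ec2-metadata-token": token},
    )
    try:
        with urllib.request.urlopen(notice_request, timeout=timeout) as resp:
            return resp.read().decode()
    except urllib.error.HTTPError as err:
        if err.code == 404:  # 404: no interruption is currently scheduled
            return None
        raise
```

In a long-running job you might call `fetch_instance_action()` every few seconds and, once it returns a notice, pass it to `parse_instance_action` to decide whether there is still time to save state.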

3. Savings Plan / Reserved Instances

This pricing structure lets you reserve AWS resources in exchange for a one- or three-year commitment. Because you pay for the whole term, it is typically more expensive than spot pricing, but AWS offers discounts of up to 70% over on-demand rates on this plan.

Both Standard and Convertible Reserved Instances are available for EC2. Convertible Reserved Instances are often the better option because they offer more flexibility: they can be exchanged for a different instance configuration during the term.

Paying large sums all at once can be challenging, so AWS also offers on-demand rates that let you pay as you go. On-demand prices can carry a 27% to 35% premium over reserved prices. Let’s look at some of the use cases for on-demand pricing.

Typically, you want on-demand instances if you’re working on short-term projects. Scenarios where on-demand is preferable include:

- when you are working inconsistently.

- when performing tasks that shouldn’t be interrupted, like a migration process.

- when your schedule is unpredictable and you’re seeking flexibility.

For Dedicated Hosts, you can choose on-demand or reserved pricing. AWS provides a table listing the costs of the various dedicated hosts. Because dedicated hosts give you more control and help with regulatory compliance, they are more expensive.

Ease of Use

Amazon EC2 is quite hard to “enter.” Its terminology and guidebooks are complicated and take time to get used to, and both the setup process and cost control are complex.

But given enough time and experience, AWS EC2 is one of the most flexible, dynamically priced, and varied cloud GPU platforms, able to fit almost any deep learning workload you want to run online.

AWS provides extensive documentation, and countless EC2 setup guides around the internet will help you through the initial setup.

After that, the AI support, convenient server locations, and adjustable pricing should save you plenty of headaches in the long term.

4. Azure (Azure N series)

- Extremely flexible

- Deep learning support

- Azure Notebooks integration

- Large variety of GPUs

- Global infrastructure

- Entry barrier high

Azure is Microsoft’s platform for all your cloud computing needs.

Azure offers the widest range of GPU options. Although that doesn’t guarantee these GPUs will be available when you need them, Microsoft deserves praise for providing so many alternatives. Azure might be a wise choice if hyperscale GPU computing is your top priority.

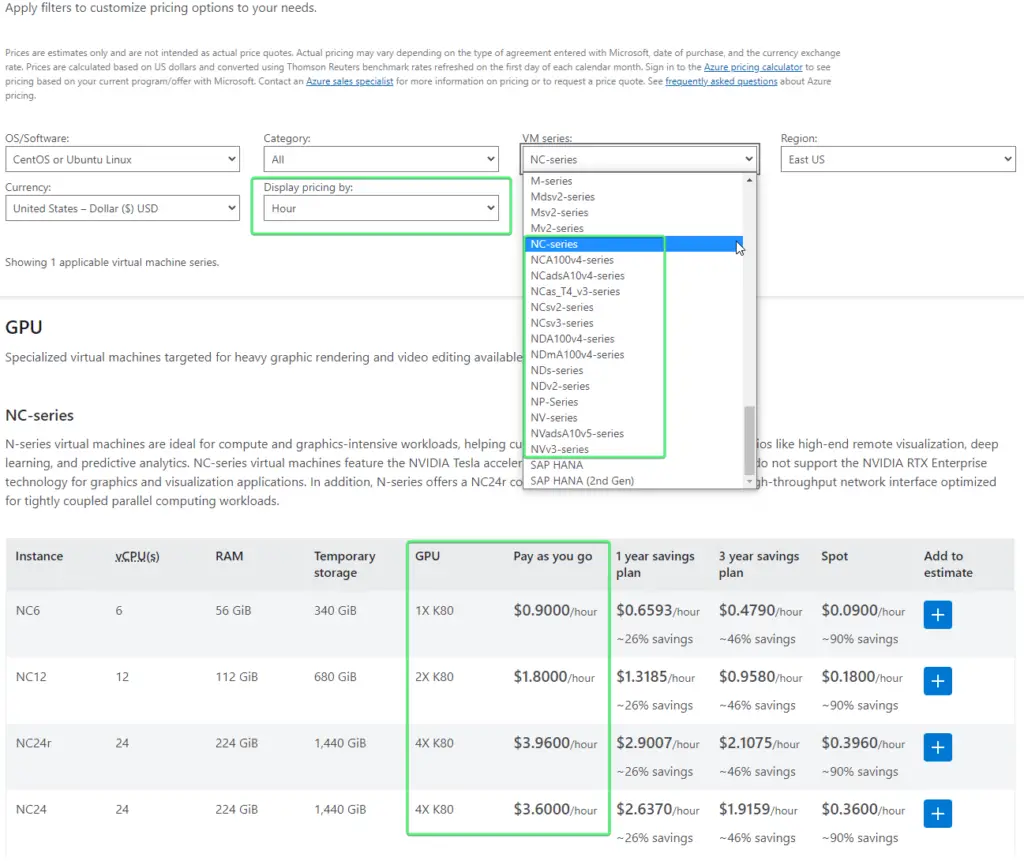

In Azure, virtual machines (VMs) come in families, or series, of predetermined sizes. The term “instance” is often used to describe a single VM. Each VM family comes with a specific number of CPU cores and GB of RAM and is built for particular use cases. Unlike on-premises Hyper-V or VMware, Azure does not let you freely mix and match CPU cores and GB of RAM. Therefore, when planning the IT environment you are implementing in Microsoft Azure, it is crucial to understand the VM series you want to deploy.

VMs with GPU support

A family of Azure Virtual Machines with GPU support is called the N-series. GPUs are well suited to compute- and graphics-intensive workloads such as high-end remote visualization, deep learning, and predictive analytics.

The N-series has three families, each catering to different workloads:

- The NC-series focuses on high-performance computing and machine learning workloads. The NCv3 includes the Tesla V100 GPU.

- The ND-series focuses on deep learning training and inference scenarios and uses NVIDIA Tesla P40 GPUs. The NDv2 uses NVIDIA Tesla V100 GPUs.

- The NV-series, powered by the NVIDIA Tesla M60 GPU, handles demanding remote visualization workloads and other graphics-intensive applications.

For a detailed view of each instance, take a look at this page.

GPU Locations

Azure services are available around the globe.

Take a look at their global infrastructure here.

For a more compact view without fancy graphics, visit this page.

GPU Types and Pricing

To check the types and pricing yourself, go here, scroll down until you reach the dropdown, and under VM series look for the ones starting with the letter N (the N-series). Pricing is shown monthly by default; you can change this in the “Display pricing by” dropdown.
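Because the price list defaults to monthly figures while most other providers in this article quote hourly rates, converting between the two is handy. The sketch below assumes the 730-hour month that Azure’s price list uses (365 × 24 / 12); the $2,233.80 figure in the example is hypothetical, not a real Azure price.

```python
# Month length assumed for Azure's monthly figures: 365 days * 24 h / 12 months.
HOURS_PER_MONTH = 365 * 24 / 12  # 730.0


def monthly_to_hourly(monthly_usd: float) -> float:
    """Convert a monthly list price into an hourly rate."""
    return monthly_usd / HOURS_PER_MONTH


def hourly_to_monthly(hourly_usd: float) -> float:
    """Convert an hourly rate into the equivalent full-month price."""
    return hourly_usd * HOURS_PER_MONTH


if __name__ == "__main__":
    hypothetical_monthly = 2233.80
    print(f"${hypothetical_monthly:,.2f}/month is "
          f"${monthly_to_hourly(hypothetical_monthly):.2f}/hour")
```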

Azure offers customers the ability to buy and use computing as spot VMs, pay-as-you-go, and reserved instance options. Each Azure pricing model has advantages and disadvantages, and how you balance and use these options for the best performance will depend on the workload requirements. All of these settings may be used alone or in combination. The amount of compute capability you get is the same regardless of the pricing type you select.

1. Spot Instances

With Azure spot VMs, customers can acquire virtual machines (VMs) from a pool of unused spare capacity for up to 90% less than pay-as-you-go prices. The catch is that Azure can evict spot VMs with little advance notice if capacity demand rises or if the capacity is needed for reserved-instance or pay-as-you-go customers. Running stateless, non-production applications, big data jobs, and similar workloads on spot VMs can yield significant savings, but the risk of disruption makes them a poor fit for mission-critical production workloads that cannot afford a service interruption.

2. Pay-as-you-go instances

Capacity can be increased or decreased as needed, and you only pay (by the second) for what you use. No long-term commitments or upfront payments are required. Although this option can be more expensive than Azure’s other pricing options, it often gives customers the flexibility and availability needed for unpredictable, mission-critical workloads.

3. Reserved instances

With Azure’s reserved virtual machine instances, customers can acquire computing capacity for up to 72% less than they would pay with pay-as-you-go. The discount requires a one- or three-year commitment to a virtual machine in a specific region. Reserved VMs can be a good match for steady workloads and long-running applications that must run continuously.
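Whether a reservation beats pay-as-you-go comes down to utilization: a reserved VM is billed for every hour of its term, while a pay-as-you-go VM is billed only while it runs. With a discount d, the reservation becomes cheaper once the VM runs for more than a (1 − d) fraction of the time, so at the best-case 72% discount the threshold is 28%. The sketch below checks this with a hypothetical $3.00/hour rate and a 730-hour month; real discounts vary by VM size, region, and term.

```python
HOURS_PER_MONTH = 730.0  # month length assumed for the comparison


def payg_monthly_cost(hourly_rate: float, hours_used: float) -> float:
    """Pay-as-you-go: billed only for the hours the VM actually runs."""
    return hourly_rate * hours_used


def reserved_monthly_cost(hourly_rate: float, discount: float) -> float:
    """Reserved: discounted rate, but billed for every hour of the month."""
    return hourly_rate * (1 - discount) * HOURS_PER_MONTH


def break_even_utilization(discount: float) -> float:
    """Fraction of the month above which the reservation is cheaper."""
    return 1 - discount


if __name__ == "__main__":
    rate, discount = 3.00, 0.72  # hypothetical rate, best-case discount
    print(f"Reservation pays off above {break_even_utilization(discount):.0%} utilization")
    for hours in (100, 300, 500):
        print(hours, "h:", payg_monthly_cost(rate, hours),
              "vs reserved", reserved_monthly_cost(rate, discount))
```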

Ease of Use

Like AWS EC2, Azure is robust and industrial-grade, but as a result it is hard for beginners to dive into. There are many elements to consider, such as location, which series or family to choose, pricing computations, pricing plans, and which product is best for you.

However, once you get past the hurdle of getting comfortable with Azure’s framework, you will find that it covers all of your cloud GPU needs, with every tool, instance type, and flexible pricing option ready to be used.

5. Coreweave

- Highly qualified provider

- 10 different NVIDIA GPU models

- Kubernetes native

- High quality, premium service

- NVIDIA partnered

- AI Solutions included

- Largest deployment of A40s in North America

- USA only data centers

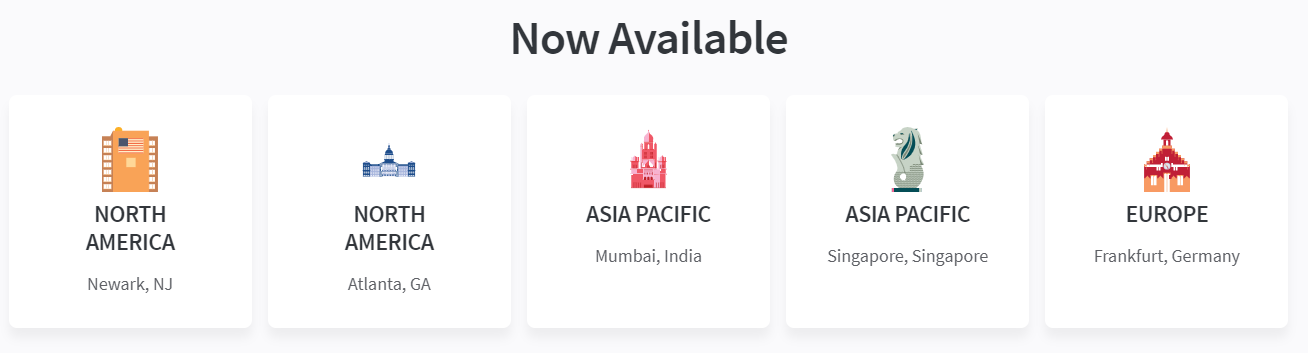

CoreWeave, a startup founded in 2017, offers GPU computing resources with a wide variety of NVIDIA GPU models (10+) and ultra-low-latency data centers in the USA. CoreWeave’s resources are fast, flexible, and highly available, as they offer:

- GPUs on-demand, at scale

- CPU instances

- Containerized deployments (Kubernetes)

- Virtual Machines

- Storage and networking

CoreWeave provides cloud services for compute-intensive projects, such as AI, machine learning, visual effects and rendering, batch processing, and pixel streaming. The infrastructure of CoreWeave is designed specifically for burst workloads, and it can scale up or down quickly.

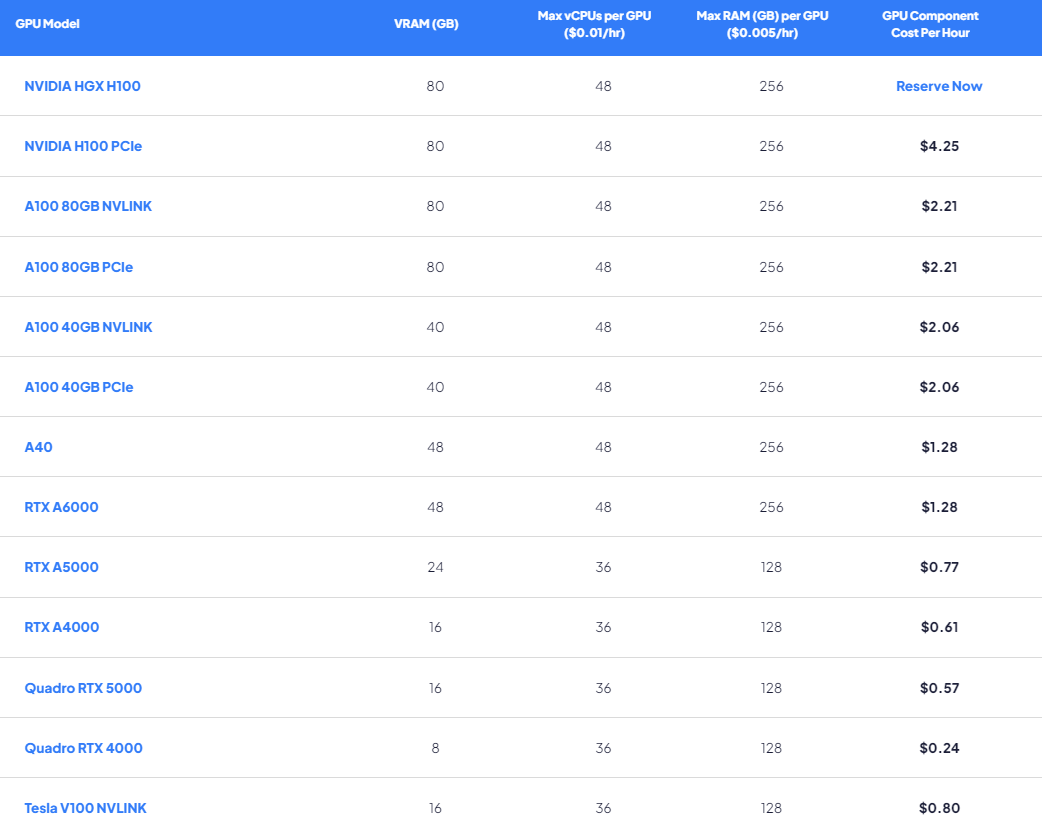

CoreWeave’s distinctive pricing and efficiency stem from the company’s performance-adjusted cost structure, which consists of two parts.

First, CoreWeave cloud instances are extremely flexible, and clients only pay for the HPC resources they actually utilize.

Second, clients may use computation more effectively thanks to CoreWeave’s Kubernetes-native infrastructure and networking architecture, which also produces performance advantages, including industry-leading spin-up times and rapid auto-scaling features.

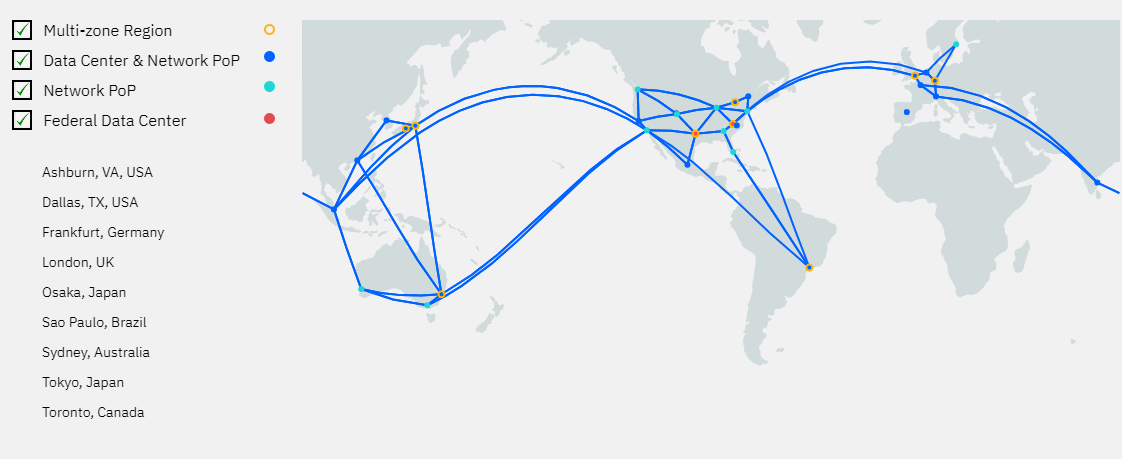

GPU Locations

CoreWeave has servers across the USA, in its US East, Central, and West regions.

Take a detailed look at their locations here.

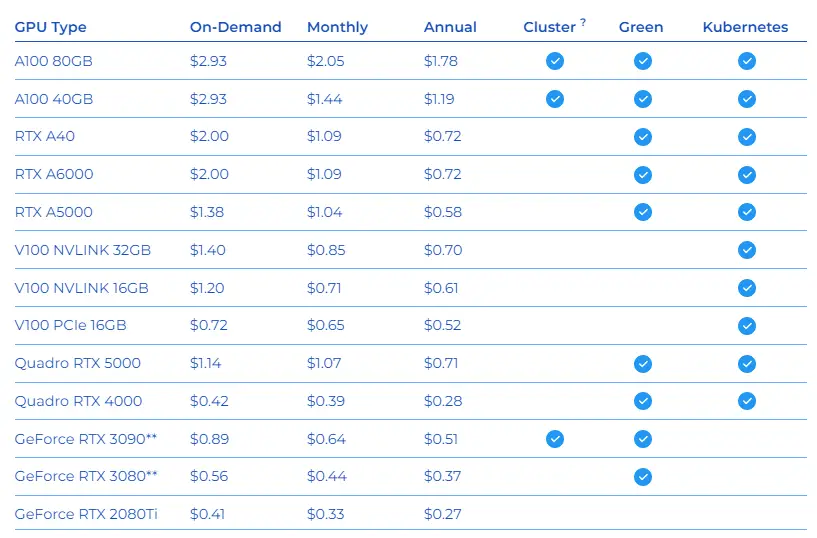

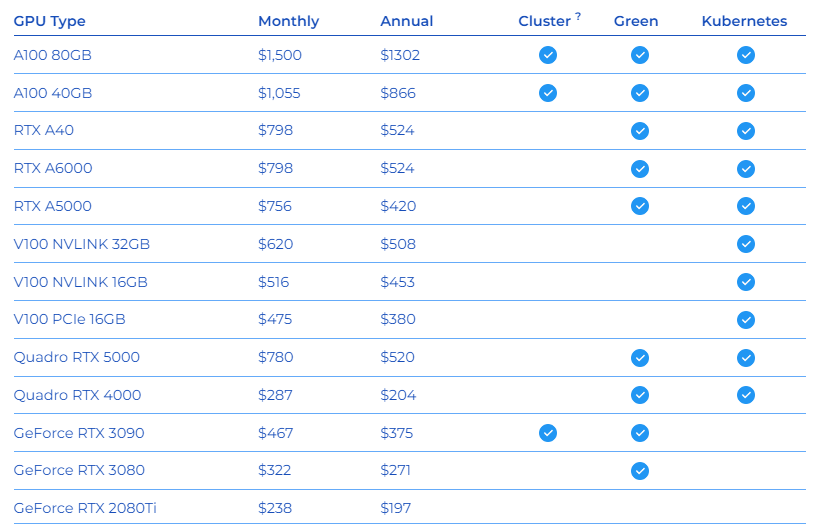

GPU Types and Pricing

CoreWeave offers more than 10 NVIDIA GPU models.

Pricing for CoreWeave Cloud GPU instances is extremely flexible and designed to provide you complete control over both configuration and cost. The flat fee pricing shown below combines the costs of a GPU component, the number of virtual CPUs, and the amount of RAM allotted to create the overall instance cost. The GPU selected for your workload or Virtual Server is the only variable, keeping things straightforward. CPU and RAM costs are the same per base unit.

A GPU instance must have at least 1 GPU, 1 vCPU, and 2 GB of RAM to be valid. When a Virtual Server is deployed, the GPU instance configuration must also include at least 40 GB of NVMe-tier root disk storage.
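The pricing model described above is additive: a per-GPU rate that depends on the model you choose, plus vCPU and RAM rates that are the same per unit for every GPU. The Python sketch below illustrates that structure and enforces the stated minimums. The rates in the example are hypothetical placeholders, not CoreWeave’s actual prices, and storage costs are not modeled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rates:
    gpu_per_hour: float     # varies with the GPU model you pick
    vcpu_per_hour: float    # same per-unit price regardless of GPU
    ram_gb_per_hour: float  # same per-unit price regardless of GPU


MIN_GPUS, MIN_VCPUS, MIN_RAM_GB = 1, 1, 2
MIN_ROOT_DISK_GB = 40  # NVMe root disk required when deploying a Virtual Server


def hourly_cost(rates: Rates, gpus: int, vcpus: int, ram_gb: int,
                virtual_server: bool = False, root_disk_gb: int = 0) -> float:
    """Sum the GPU, vCPU and RAM components after checking the minimums."""
    if gpus < MIN_GPUS or vcpus < MIN_VCPUS or ram_gb < MIN_RAM_GB:
        raise ValueError("needs at least 1 GPU, 1 vCPU and 2 GB of RAM")
    if virtual_server and root_disk_gb < MIN_ROOT_DISK_GB:
        raise ValueError("a Virtual Server needs at least 40 GB of NVMe root disk")
    # Storage is not included in this estimate.
    return (gpus * rates.gpu_per_hour
            + vcpus * rates.vcpu_per_hour
            + ram_gb * rates.ram_gb_per_hour)


if __name__ == "__main__":
    example = Rates(gpu_per_hour=1.00, vcpu_per_hour=0.01, ram_gb_per_hour=0.005)  # hypothetical
    print(hourly_cost(example, gpus=1, vcpus=8, ram_gb=32,
                      virtual_server=True, root_disk_gb=40))
```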

Check out the GPU types and prices here.

Ease of Use

Although CoreWeave’s services are undoubtedly premium quality, the sign-up process can be a bit overwhelming due to strict measures against fraud and other criminal activity. On the other hand, this also means better security for users.

Setup is of intermediate difficulty. In day-to-day use, however, the service has flexible pricing, comes with deep learning capabilities pre-installed (provided you’ve chosen the correct image), and is extremely fast.

Unfortunately, users outside the USA may see higher latency due to the distance.

6. Linode

- Reliable, developer focused IaaS

- Simple UI

- Affordable storage

- Powerful API

- Easily scalable

- Affordable pricing

- AI support

- NVIDIA Quadro RTX 6000 only

- An NVIDIA Quadro RTX 6000 plan with 32 GB of RAM costs $1.50 per hour

Linode is regarded as a trustworthy cloud service provider, with 11 global data centers and over 1 million customers. Headquartered in Pennsylvania, the company offers a range of services, such as web, VPS, and internet hosting, and cloud computing. Its products are available to customers in the approximately 196 countries it serves.

The main offerings from Linode are computation, developer tools, networking, managed services, and storage, each with a separate price tag. It focuses primarily on providing SSD Linux servers to support a range of applications.

Because of its strong performance, developers regard Linode as a dependable choice. Setting up a Linode cloud or virtual Linux server takes only a few seconds.

The Linode Manager is also useful for developers: it makes it simple to choose a node’s placement, scale resources, and manage Linux options. Businesses can also manage several server instances from a single system using NodeBalancer and the Manager. Weekly or monthly backups round out these features.

GPU Locations

Linode’s GPU servers are available in the following locations:

GPU Types and Pricing

Check out Linode’s GPU server pricing page.

1. Quadro RTX 6000

| Specification | Quadro RTX 6000 |
|---|---|
| CUDA Cores | 4,608 |
| Tensor Cores | 576 |
| RT Cores | 72 |
| GPU Memory | 24 GB GDDR6 |
| RTX-OPS | 84T |
| Rays Cast | 10 Giga Rays per Sec |
| FP32 Performance | 16.3 TFLOPS |
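The FP32 figure in the table follows from the core count. Assuming a boost clock of about 1.77 GHz (not listed in the table above) and counting each fused multiply-add as two floating-point operations per CUDA core per cycle, the theoretical peak works out as:

```latex
\begin{aligned}
\text{Peak FP32} &= N_{\text{CUDA}} \times 2\,\tfrac{\text{FLOP}}{\text{core}\cdot\text{cycle}} \times f_{\text{boost}} \\
&= 4608 \times 2 \times 1.77 \times 10^{9}\ \text{s}^{-1} \\
&\approx 1.63 \times 10^{13}\ \text{FLOP/s} \approx 16.3\ \text{TFLOPS}
\end{aligned}
```

This is a theoretical peak; real deep learning workloads usually achieve less.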

2. Pricing

Ease of Use

Linode’s UI is very user-friendly, and setting up its deep-learning-ready GPU servers is straightforward. Thanks to on-demand pricing, the service is also affordable.

However, offering only a single GPU model limits its flexibility.

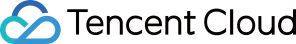

7. Tencent Cloud

- Offers AI solutions

- $1.73 per hour for Tesla V100

- Located around the globe

- Various GPUs offered

- Mainly focused on Asian locations

- GPU models varied

- Affordable costs

Tencent Cloud provides fast, stable, and flexible cloud GPU computing services across various rendering instances that use GPUs including the NVIDIA A10, Tesla T4, Tesla P4, Tesla P40, Tesla V100, and Intel SG1. Their services are available in the Asian cities of Guangzhou, Shanghai, Beijing, and Singapore.

The high floating-point performance of their GPUs makes them well suited to deep learning training and computing.

Tencent Cloud offers GPU instances in the GN6S, GN7, GN8, GN10X, and GN10Xp series to support deep learning training and inference. These pay-as-you-go instances can be launched in their virtual private cloud and can freely access other services.

Types of GPUs and Pricing

Check out their pricing page here.

Tencent offers the following five GPU instance families, as listed on their official website.

1. Computing GN6S

| Instance Specification | GPU (Tesla P4) | GPU Video Memory | vCPU | Memory | Pay-as-You-Go |
|---|---|---|---|---|---|
| GN6S.LARGE20 | 1 | 8 GB | 4 cores | 20 GB | 1.11 USD/hr |
| GN6S.2XLARGE40 | 2 | 16 GB | 8 cores | 40 GB | 2.22 USD/hr |

2. Computing GN7

| Instance Specification | GPU (NVIDIA T4) | GPU Video Memory | vCPU | Memory | Pay-as-You-Go |
|---|---|---|---|---|---|
| GN7.LARGE20 | 1/4 vGPU | 4 GB | 4 cores | 20 GB | 0.49 USD/hr |
| GN7.2XLARGE40 | 1/2 vGPU | 8 GB | 10 cores | 40 GB | 1.01 USD/hr |
| GN7.2XLARGE32 | 1 | 16 GB | 8 cores | 32 GB | 1.26 USD/hr |
| GN7.5XLARGE80 | 1 | 16 GB | 20 cores | 80 GB | 2.01 USD/hr |
| GN7.8XLARGE128 | 1 | 16 GB | 32 cores | 128 GB | 2.77 USD/hr |
| GN7.10XLARGE160 | 2 | 32 GB | 40 cores | 160 GB | 4.03 USD/hr |
| GN7.20XLARGE320 | 4 | 64 GB | 80 cores | 320 GB | 8.06 USD/hr |

3. Computing GN8

| Instance Specification | GPU (Tesla P40) | GPU Video Memory | vCPU | Memory | Pay-as-You-Go |
|---|---|---|---|---|---|
| GN8.LARGE56 | 1 | 24 GB | 6 cores | 56 GB | 2.45 USD/hr |
| GN8.3XLARGE112 | 2 | 48 GB | 14 cores | 112 GB | 5.01 USD/hr |
| GN8.7XLARGE224 | 4 | 96 GB | 28 cores | 224 GB | 10.02 USD/hr |
| GN8.14XLARGE448 | 8 | 192 GB | 56 cores | 448 GB | 20.04 USD/hr |

4. Computing GN10X

| Instance Specification | GPU (Tesla V100-NVLINK-32G) | GPU Video Memory | vCPU | Memory | Pay-as-You-Go |
|---|---|---|---|---|---|
| GN10X.2XLARGE40 | 1 | 32 GB | 8 cores | 40 GB | 2.68 USD/hr |
| GN10X.4XLARGE80 | 2 | 64 GB | 18 cores | 80 GB | 5.43 USD/hr |
| GN10X.9XLARGE160 | 4 | 128 GB | 36 cores | 160 GB | 10.86 USD/hr |
| GN10X.18XLARGE320 | 8 | 256 GB | 72 cores | 320 GB | 21.71 USD/hr |

5. Computing GN10Xp

| Instance Specification | GPU (Tesla V100-NVLINK-32G) | GPU Video Memory | vCPU | Memory | Pay-as-You-Go |
|---|---|---|---|---|---|
| GN10Xp.2XLARGE40 | 1 | 32 GB | 10 cores | 40 GB | 1.72 USD/hr |
| GN10Xp.5XLARGE80 | 2 | 64 GB | 20 cores | 80 GB | 3.44 USD/hr |
| GN10Xp.10XLARGE160 | 4 | 128 GB | 40 cores | 160 GB | 6.89 USD/hr |
| GN10Xp.20XLARGE320 | 8 | 256 GB | 80 cores | 320 GB | 13.78 USD/hr |
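With this many sizes, a small script can pick the cheapest configuration that meets a memory requirement. The Python sketch below encodes the pay-as-you-go prices from the Tencent tables (which may have changed since writing) and filters on total GPU memory, which only helps if your workload can be split across several GPUs. It also makes one detail easy to spot: GN10Xp and GN10X use the same V100 GPU, yet the single-GPU GN10Xp size costs $1.72 per hour against $2.68 for GN10X.

```python
from typing import List, NamedTuple, Optional


class Offer(NamedTuple):
    name: str
    gpus: float         # fractional for the GN7 vGPU sizes
    vram_gb: int        # total GPU memory across all GPUs
    usd_per_hour: float


OFFERS: List[Offer] = [
    Offer("GN6S.LARGE20", 1, 8, 1.11), Offer("GN6S.2XLARGE40", 2, 16, 2.22),
    Offer("GN7.LARGE20", 0.25, 4, 0.49), Offer("GN7.2XLARGE40", 0.5, 8, 1.01),
    Offer("GN7.2XLARGE32", 1, 16, 1.26), Offer("GN7.5XLARGE80", 1, 16, 2.01),
    Offer("GN7.8XLARGE128", 1, 16, 2.77), Offer("GN7.10XLARGE160", 2, 32, 4.03),
    Offer("GN7.20XLARGE320", 4, 64, 8.06),
    Offer("GN8.LARGE56", 1, 24, 2.45), Offer("GN8.3XLARGE112", 2, 48, 5.01),
    Offer("GN8.7XLARGE224", 4, 96, 10.02), Offer("GN8.14XLARGE448", 8, 192, 20.04),
    Offer("GN10X.2XLARGE40", 1, 32, 2.68), Offer("GN10X.4XLARGE80", 2, 64, 5.43),
    Offer("GN10X.9XLARGE160", 4, 128, 10.86), Offer("GN10X.18XLARGE320", 8, 256, 21.71),
    Offer("GN10Xp.2XLARGE40", 1, 32, 1.72), Offer("GN10Xp.5XLARGE80", 2, 64, 3.44),
    Offer("GN10Xp.10XLARGE160", 4, 128, 6.89), Offer("GN10Xp.20XLARGE320", 8, 256, 13.78),
]


def cheapest(min_vram_gb: int) -> Optional[Offer]:
    """Lowest hourly price among offers with at least min_vram_gb of total GPU memory."""
    fits = [o for o in OFFERS if o.vram_gb >= min_vram_gb]
    return min(fits, key=lambda o: o.usd_per_hour, default=None)


if __name__ == "__main__":
    for need in (8, 32, 100):
        print(need, "GB ->", cheapest(need))
```

Note that for small requirements the winner can be a fractional GN7 vGPU size, so check whether a shared GPU suits your workload.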

One point to consider is where the GPU servers are located. For the most part, Tencent Cloud data centers are in China, with some in other parts of Asia.

GPU Locations

Check out locations here.

Tencent Cloud GPUs are mostly available in Asia. When choosing a data center, keep in mind that if you upload your own datasets to the cloud for processing, a server closer to you will make the transfer much quicker.

| Availability | Type | GPU | System | Location |
|---|---|---|---|---|
| Featured | PNV4 | NVIDIA A10 | CentOS 7.2 or later; Ubuntu 16.04 or later; Windows Server 2016 or later | Guangzhou, Shanghai, and Beijing |
| Featured | GT4 | NVIDIA A100 NVLink 40 GB | CentOS 7.2 or later; Ubuntu 16.04 or later; Windows Server 2016 or later | Guangzhou, Shanghai, Beijing, and Nanjing |
| Featured | GN10Xp | NVIDIA Tesla V100 NVLink 32 GB | CentOS 7.2 or later; Ubuntu 14.04 or later; Windows Server 2012 or later | Guangzhou, Shanghai, Beijing, Nanjing, Chengdu, Chongqing, Singapore, Mumbai, Silicon Valley, and Frankfurt |
| Featured | GN7 | NVIDIA Tesla T4 | CentOS 7.2 or later; Ubuntu 14.04 or later; Windows Server 2012 or later | Guangzhou, Shanghai, Nanjing, Beijing, Chengdu, Chongqing, Hong Kong, Singapore, Bangkok, Jakarta, Mumbai, Seoul, Tokyo, Silicon Valley, Virginia, Frankfurt, Moscow, and São Paulo |
| Featured | GN7 | vGPU (NVIDIA Tesla T4) | CentOS 8.0 64-bit (GRID 11.1); Ubuntu 20.04 LTS 64-bit (GRID 11.1) | Guangzhou, Shanghai, Nanjing, Beijing, Chengdu, Chongqing, Hong Kong, Silicon Valley, and São Paulo |
| Featured | GN7vi | NVIDIA Tesla T4 | CentOS 7.2–7.9; Ubuntu 14.04 or later | Shanghai and Nanjing |
| Available | GI3X | NVIDIA Tesla T4 | CentOS 7.2 or later; Ubuntu 14.04 or later; Windows Server 2012 or later | Guangzhou, Shanghai, Beijing, Nanjing, Chengdu, and Chongqing |
| Available | GN10X | NVIDIA Tesla V100 NVLink 32 GB | CentOS 7.2 or later; Ubuntu 14.04 or later; Windows Server 2012 or later | Guangzhou, Shanghai, Beijing, Nanjing, Chengdu, Chongqing, Singapore, Silicon Valley, Frankfurt, and Mumbai |
| Available | GN8 | NVIDIA Tesla P40 | CentOS 7.2 or later; Ubuntu 14.04 or later; Windows Server 2012 or later | Guangzhou, Shanghai, Beijing, Chengdu, Chongqing, Hong Kong, and Silicon Valley |
| Available | GN6/GN6S | NVIDIA Tesla P4 | CentOS 7.2 or later; Ubuntu 14.04 or later; Windows Server 2012 or later | GN6: Chengdu; GN6S: Guangzhou, Shanghai, and Beijing |

Choosing the best type of instance

- A tick (✓) indicates that the model supports the corresponding feature.

- A star (★) indicates that the model is recommended.

| Feature/Instance | PNV4 | GT4 | GN10Xp | GN7 | GN7vi | GI3X | GN10X | GN8 | GN6/GN6S |
|---|---|---|---|---|---|---|---|---|---|
| Graphics and image processing | ✓ | – | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Video encoding and decoding | ✓ | – | ✓ | ★ | ★ | ★ | ✓ | ✓ | ✓ |
| Deep learning training | ✓ | ★ | ★ | ✓ | ✓ | ✓ | ★ | ★ | ✓ |
| Deep learning inference | ★ | ✓ | ★ | ★ | ★ | ★ | ★ | ✓ | ✓ |
| Scientific computing | – | ★ | ★ | – | – | – | ★ | – | – |
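The recommendation matrix can also be queried programmatically, which helps when a workload spans several categories. The Python sketch below transcribes Tencent’s table (★ recommended, ✓ supported, – not supported) and returns the instance types recommended for every feature you ask for; deep learning training plus scientific computing, for example, narrows the list to GT4, GN10Xp, and GN10X.

```python
from typing import Dict, List

INSTANCES = ["PNV4", "GT4", "GN10Xp", "GN7", "GN7vi", "GI3X", "GN10X", "GN8", "GN6/GN6S"]

# One mark per instance, in the column order of INSTANCES.
MATRIX: Dict[str, str] = {
    "Graphics and image processing": "✓–✓✓✓✓✓✓✓",
    "Video encoding and decoding":   "✓–✓★★★✓✓✓",
    "Deep learning training":        "✓★★✓✓✓★★✓",
    "Deep learning inference":       "★✓★★★★★✓✓",
    "Scientific computing":          "–★★–––★––",
}


def recommended_for(*features: str) -> List[str]:
    """Instances marked ★ for every requested feature, in table order."""
    return [inst for i, inst in enumerate(INSTANCES)
            if all(MATRIX[f][i] == "★" for f in features)]


if __name__ == "__main__":
    print(recommended_for("Deep learning training", "Scientific computing"))
```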

Ease of Use

Tencent Cloud is fairly easy to use once set up. However, the setup process requires some understanding of their server locations, which one best fits your needs, and which instance type you want. There are also few user reviews from outside China (most available reviews are translated).

Despite this, the pricing is quite competitive and the range of services is broad.

Tencent also offers a Best Practices guide for deep learning on their servers.

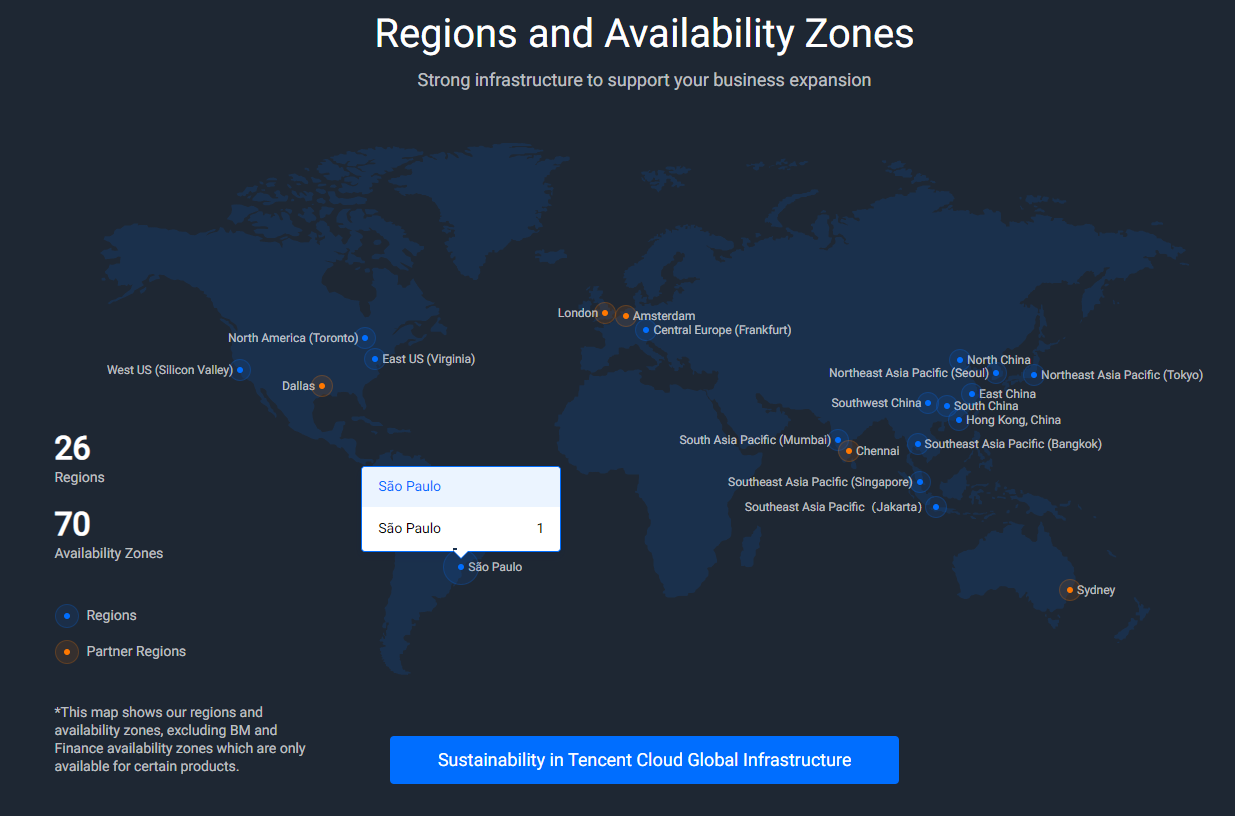

8. Lambda Labs

- Offers products (GPU servers, laptops, clusters etc)

- On-demand pricing

- Jupyter, Keras, TensorFlow et cetera (AI support)

- Multiple GPU variants

- $1.10 per hour for NVIDIA A100

- Multi-GPU instances

- Specifically tailored for data science

Lambda Labs provides cloud GPU instances for deep learning model scaling from a single physical system to many virtual machines.

Major deep learning frameworks, CUDA drivers, and a dedicated Jupyter notebook come pre-installed on their virtual machines. You can connect to the instances in two ways: through the web terminal on the cloud dashboard, or directly over SSH using the supplied SSH keys.

The instances support up to 10 Gbps of inter-node bandwidth for distributed training across many GPUs, which reduces the time needed for model optimization. They offer on-demand pricing as well as reserved pricing for terms of up to 3 years.
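To get a feel for what 10 Gbps means for distributed training, consider the gradient synchronization step. In a ring all-reduce, a common way to average gradients across n nodes, each node sends and receives about 2(n − 1)/n times the gradient size per step. The Python sketch below turns that into an idealized lower bound on time; it ignores latency, protocol overhead, and overlap with computation, and the 1-billion-parameter FP16 model in the example is hypothetical.

```python
def ring_allreduce_seconds(num_params: int, bytes_per_param: int,
                           nodes: int, gbps: float) -> float:
    """Idealized lower bound for one ring all-reduce of the gradients.

    Each node sends (and receives) 2 * (n - 1) / n times the gradient size.
    Ignores latency, protocol overhead and overlap with computation.
    """
    size_bits = num_params * bytes_per_param * 8
    traffic_bits = 2 * (nodes - 1) / nodes * size_bits
    return traffic_bits / (gbps * 1e9)


if __name__ == "__main__":
    for n in (2, 4, 8):
        t = ring_allreduce_seconds(1_000_000_000, 2, n, 10)
        print(f"{n} nodes: at least {t:.2f} s per gradient sync")
```

Even in this idealized model, two nodes syncing a 1B-parameter FP16 model spend at least 1.6 s per step on communication, which is why overlapping communication with computation matters at this bandwidth.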

GPU instances on the platform include NVIDIA RTX 6000, Quadro RTX 6000, and Tesla V100s.

GPU Locations

The official website does not list data center locations, but it does list the regions whose users are eligible.

Users from the following regions can use Lambda Cloud: USA, Canada, Chile, European Union, Switzerland, UK, Iceland, UAE, Saudi Arabia, South Africa, Israel, Taiwan, Korea, Japan, Singapore, Australia, and New Zealand.

GPU Types and Pricing

Check out Lambda Labs’ pricing page here.

Lambda Labs offers on-demand pricing that is considerably cheaper than many of its competitors.

Ease of Use

Lambda Labs is specifically designed for data science and deep learning tasks and comes with a clean, simple dashboard for booting up and using your GPUs. PyTorch, Python 3, Keras, and more are all pre-installed and ready to go on their instances.

9. Genesis Cloud

- Simple dashboard

- Easy access

- Renewable energy sources for data centers

- $1.30 per hour for an RTX 3090

- Located around Europe and the USA

- Offers only NVIDIA GeForce RTX GPUs

- Offers AMD CPUs

- TensorFlow, PyTorch support

Genesis Cloud is a reliable GPU platform with affordable rates, located in Iceland, Germany, Norway and the USA.

Their services are cheap, scalable and robust, with unlimited GPU computing power, able to be used for graphics, deep learning, Big Data and more.

Genesis also offers free credits upon registration with discounts for longer-duration plans, as well as a public API with support for PyTorch and TensorFlow for deep learning.

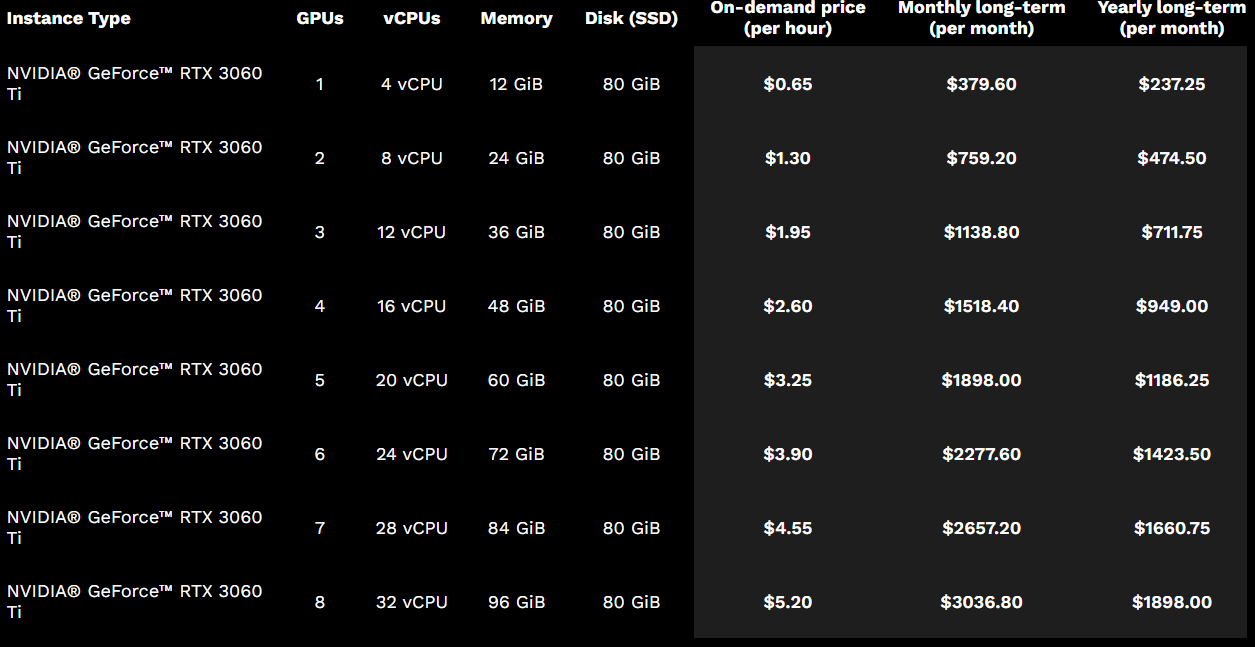

Types of GPU and Pricing

Check out Genesis Cloud GPU types and prices here.

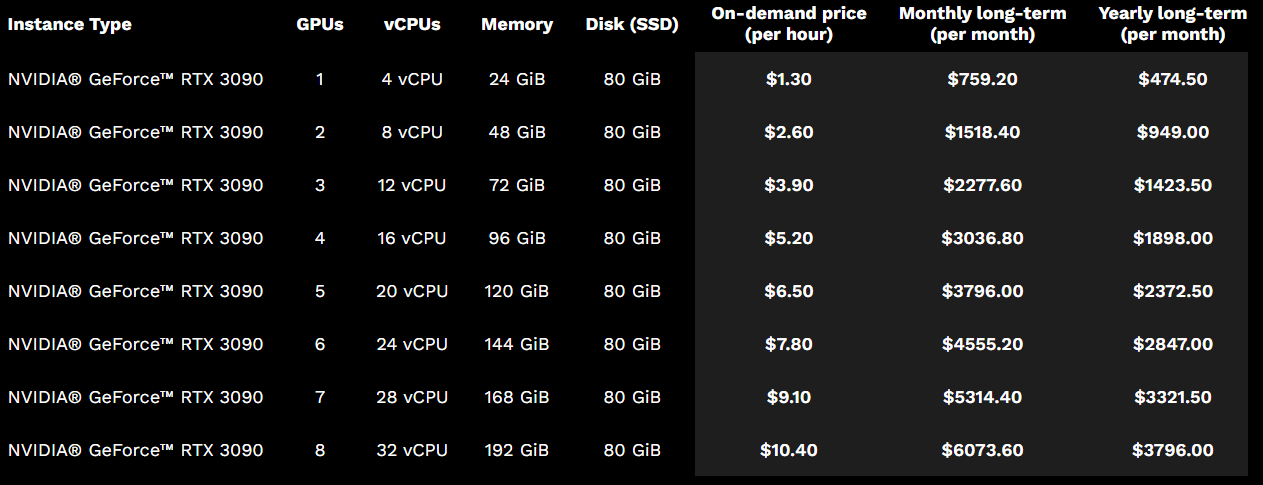

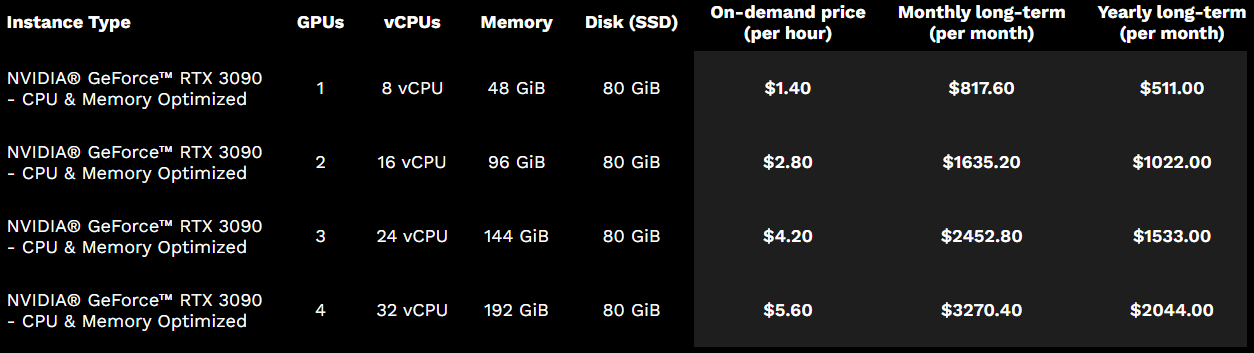

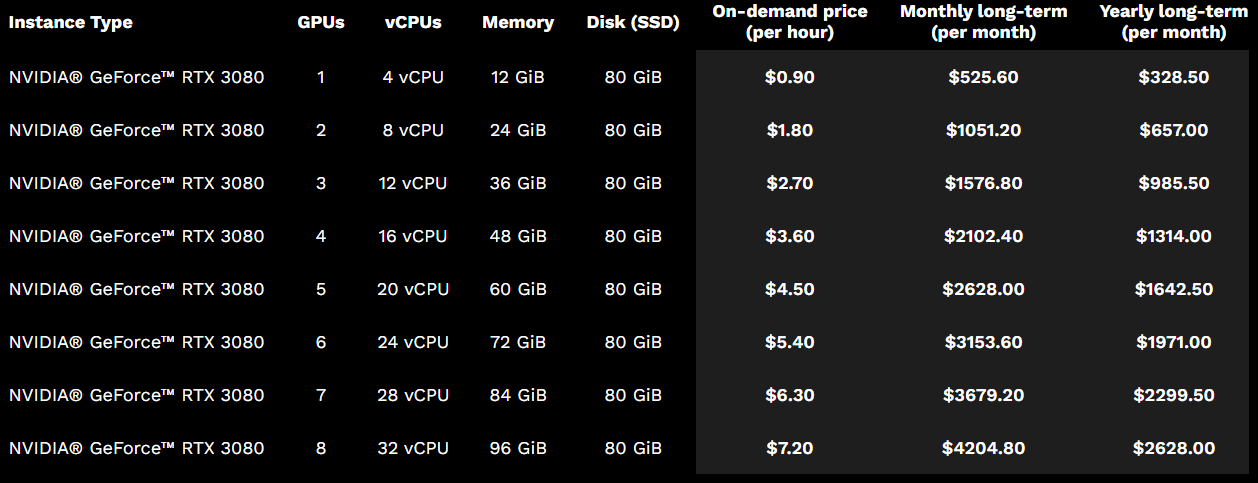

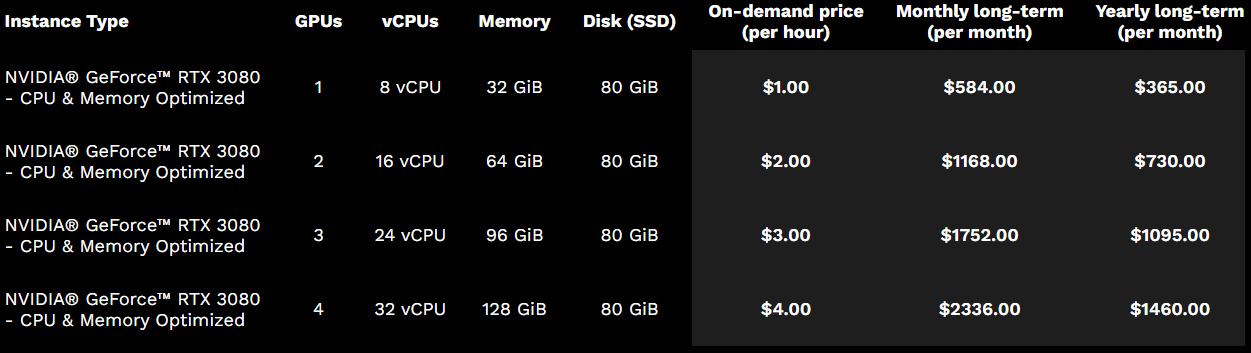

Genesis Cloud lets you use NVIDIA GPUs and AMD CPUs for various purposes, including deep learning. Their top option, the GeForce RTX 3090, costs from $1.30 per hour up to $10.40 per hour depending on the configuration.

You can also see that for long-term plans, the GPU price drops significantly (halved, to as low as $0.65 per hour).

Keep in mind, however, that you may not want a long-term plan, since new GPUs are being released frequently. You could end up locked into a plan for outdated hardware that you either cannot cancel or must pay a fee to cancel.
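To weigh that risk, compare what a commitment costs with what on-demand usage would cost for the time you actually expect to use the GPU. The Python sketch below assumes, hypothetically, that a long-term plan is billed around the clock for its whole term at the discounted rate, and uses the $1.30 and $0.65 RTX 3090 rates quoted for Genesis Cloud with a 730-hour month; check Genesis Cloud’s actual terms before relying on it. Under those assumptions, a 12-month plan only pays off if you would otherwise run the GPU continuously for more than 6 of those months.

```python
HOURS_PER_MONTH = 730.0


def commitment_cost(discounted_rate: float, term_months: int) -> float:
    """Assumed: the plan is billed for every hour of the term, used or not."""
    return discounted_rate * HOURS_PER_MONTH * term_months


def on_demand_cost(rate: float, hours_used: float) -> float:
    """On-demand: billed only for the hours actually used."""
    return rate * hours_used


def commitment_pays_off(on_demand_rate: float, discounted_rate: float,
                        term_months: int, hours_used: float) -> bool:
    """True if committing is cheaper than paying on-demand for the same usage."""
    return commitment_cost(discounted_rate, term_months) < on_demand_cost(on_demand_rate, hours_used)


if __name__ == "__main__":
    for months_used in (3, 6, 9):
        hours = months_used * HOURS_PER_MONTH
        print(months_used, "months of use:", commitment_pays_off(1.30, 0.65, 12, hours))
```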

1. NVIDIA GeForce RTX 3090

2. NVIDIA GeForce RTX 3090 (Optimized)

3. NVIDIA GeForce RTX 3080

4. NVIDIA GeForce RTX 3080 (Optimized)

5. NVIDIA GeForce RTX 3060 Ti

Ease of Use

In terms of accessibility, it is quite easy to sign up and set up servers to connect to.

A downside is the absence of the Tesla V100, a data center GPU designed for compute workloads, from their GPU offerings.

Once you sign up and set up an instance, things go smoothly without any complications.

Additional Features

- TensorFlow, PyTorch support

- Public API

- Free credits on registration

- Snapshots for easy volume allocation

- Simple and flexible dashboard

- CPU instance

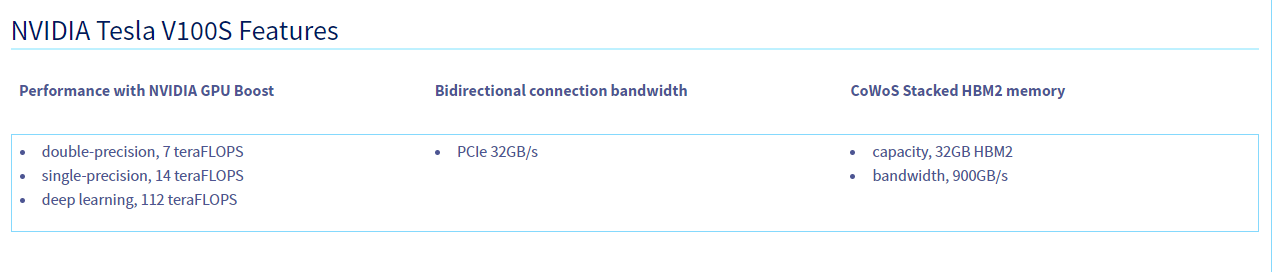

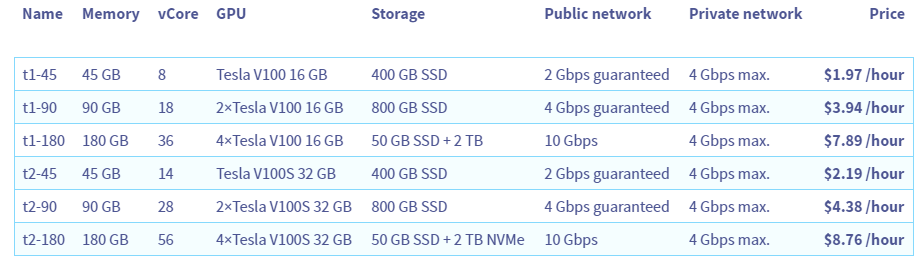

10. OVH Cloud

- High quality and robust

- Single type of GPU (NVIDIA V100)

- Offers AI solutions

- $1.97 per hour for Tesla V100S

OVH Cloud is considered Europe’s largest hosting provider, and for good reason. They host approximately 400,000 servers across 33 data centers on 4 continents. With more than 1.6 million registered customers and certifications under the ISO/IEC 27001, 27017, 27018, and 27701 standards, OVH can be trusted to keep your processes safe and private.

OVH Cloud is mainly used for cloud hosting, and its range of cloud GPUs is limited.

OVH offers only the NVIDIA V100 GPU, in 16 GB and 32 GB versions. This GPU was once regarded as one of the top deep learning accelerators, but it has since been surpassed by the NVIDIA A100 (available in 40 GB and 80 GB versions) and a variety of other Ampere and newer GPUs with better price-to-performance ratios.

The data centers for OVH Cloud are spread across the entire globe with data centers in:

- France

- Canada

- USA

- Australia

- Germany

- Poland

- United Kingdom

- Singapore

Types of GPU

OVH Cloud partners with NVIDIA but offers a single type of GPU, the Tesla V100S, which is quite robust and can handle most deep learning tasks without problems.

GPU Pricing

Check out OVH GPU pricing here.

At $1.97 per hour for an instance with a 16 GB Tesla V100, 8 cores, and 45 GB of memory, the pricing is slightly higher than other options.

Ease of Use

Setting up an OVH Cloud GPU instance follows a process similar to other cloud GPU platforms. However, on a Windows image you have to install the NVIDIA drivers yourself, as shown in this guide.

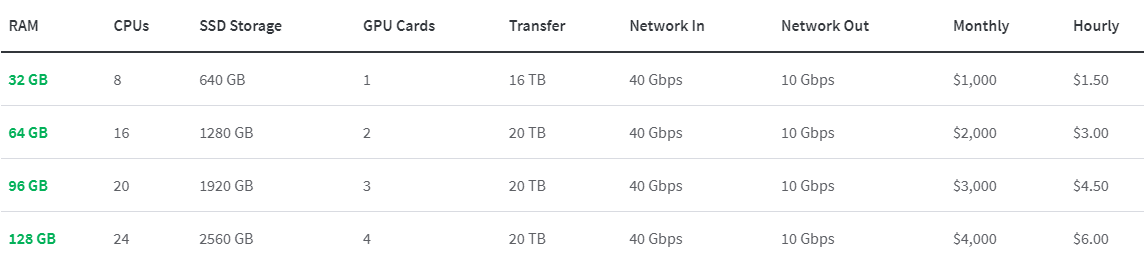

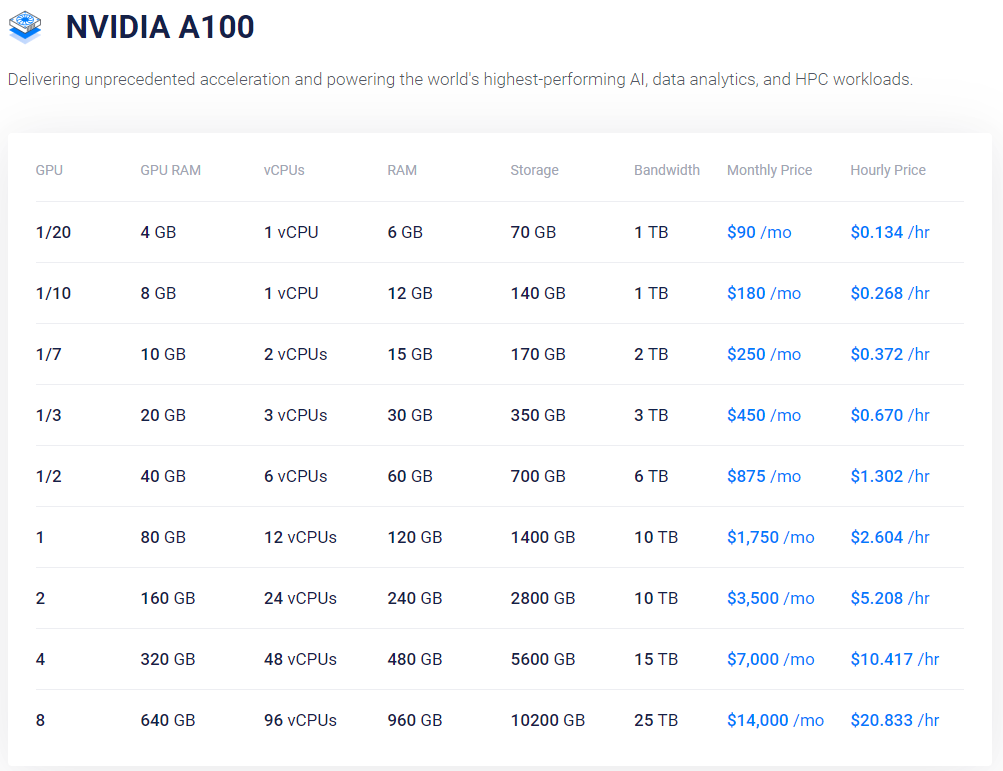

11. Vultr

- Cloud GPUs (partitions)

- Affordable pricing

- Variable types of GPU servers

- Globally available

- Offers AI services

- 1.34$ per hour for vGPUs (NVIDIA A100)

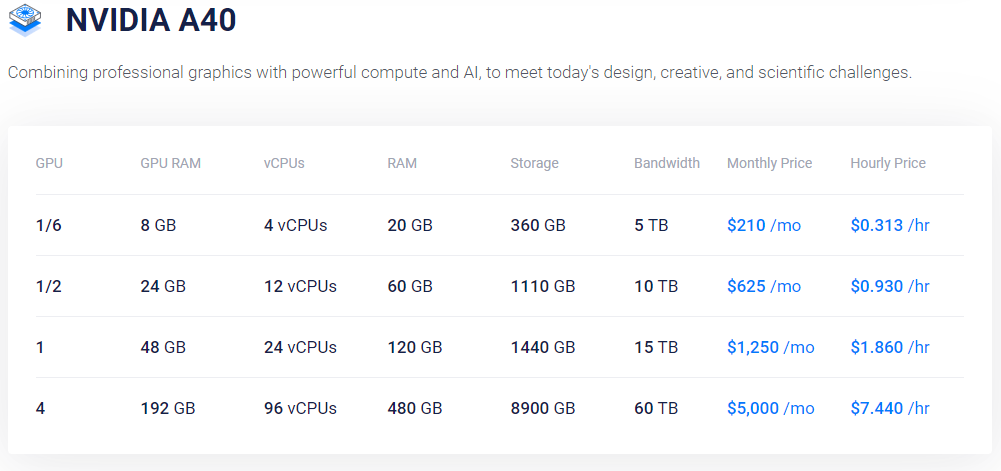

- Only NVIDIA A40 and A100

Unlike most other GPU cloud providers, Vultr requires you to rent a 4x or 8x cluster of NVIDIA A100s as ‘bare metal’ instances in order to get dedicated GPUs. Some people may see this as a feature and others as a bug.

What they mainly offer instead are ‘Cloud GPUs’, which are virtualized GPUs.

Traditionally, GPU applications need a server, on-premises or in the cloud, with a fully dedicated GPU running in passthrough mode. These setups, however, can cost thousands of dollars per month. As an alternative, Vultr divides cloud GPU instances into virtual GPUs (vGPUs), so you can choose the performance level that best suits your workload and budget. vGPUs are powered by NVIDIA AI Enterprise, which gives your server instance a vGPU that behaves like a physical GPU. Each vGPU has its own dedicated memory partition and a proportional share of compute power. Virtual GPUs use the same operating systems, frameworks, and libraries as a hardware GPU.

These Cloud GPUs are quite cost-effective at some cost in performance: a full NVIDIA A100 vGPU goes for $1.34 per hour, whereas a bare metal server costs nearly $10.40 per hour.

To find out more about their GPUs, please go to this link.

GPU Locations

- Talon A100 GPU Servers – London, New York (NJ), Silicon Valley, Tokyo

- Talon A40 GPU Servers – London, Frankfurt, Los Angeles, New York (NJ), Tokyo, Bangalore, Mumbai, Singapore, Sydney

- Bare Metal (Dedicated) GPU Servers – Unknown at the time of writing this section (January 12, 2023), as they were all out of stock.

Types of GPU Servers

Vultr offers its users two variations of GPU servers:

- Traditional bare metal servers with dedicated GPUs: single-tenant, physical hardware that runs your applications remotely and can handle the heaviest workloads.

- Cloud GPUs built on virtualized vCPU and vGPU resources, suited to data science, video encoding, graphics rendering, and similar work.

Vultr offers only NVIDIA A16, A40, and A100 GPU servers.

GPU Pricing

You can check Vultr’s GPU server pricing here.

1. Cloud GPU – NVIDIA A100

2. Cloud GPU – NVIDIA A40

3. Bare Metal – NVIDIA GPUs

Ease of Use

Vultr’s GPU instances come with CUDA and the NVIDIA drivers pre-installed, and the setup process is quick and simple.

Moreover, once you sign up, you are given $50 in credits for your personal use.

Combined with the ‘flexibility’ of their allocated vGPUs, multiple locations, and simple user interface, Vultr is a strong contender in the cloud GPU market.
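Whichever provider you pick, a quick way to confirm the NVIDIA drivers are working after an instance boots is to ask `nvidia-smi` which GPUs it can see. The Python sketch below runs `nvidia-smi --query-gpu=name,memory.total --format=csv,noheader,nounits` and parses the result into (name, memory in MiB) pairs, returning an empty list if `nvidia-smi` is not on the PATH. The GPU name used in the test is only an example.

```python
import shutil
import subprocess
from typing import List, Tuple


def parse_gpu_csv(text: str) -> List[Tuple[str, int]]:
    """Parse lines of 'name, memory' as printed with csv,noheader,nounits."""
    gpus = []
    for line in text.strip().splitlines():
        name, mem_mib = line.rsplit(",", 1)  # split on the last comma only
        gpus.append((name.strip(), int(mem_mib.strip())))
    return gpus


def list_gpus() -> List[Tuple[str, int]]:
    """Return (name, total memory in MiB) per visible GPU, or [] if nvidia-smi is missing."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_gpu_csv(result.stdout)
```

An empty result, or a `CalledProcessError`, on a GPU instance usually means the drivers need attention before you start training.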

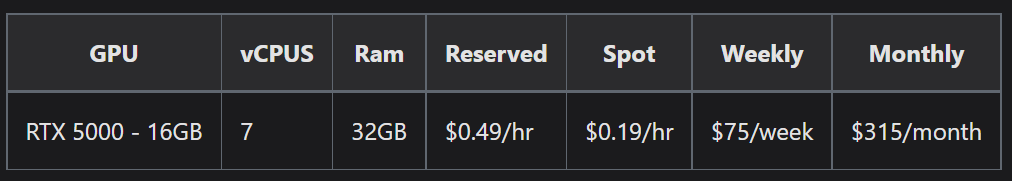

12. JarvisLabs

- Data science oriented

- Affordable pricing

- No pre-payment

- Provides Jupyter integration

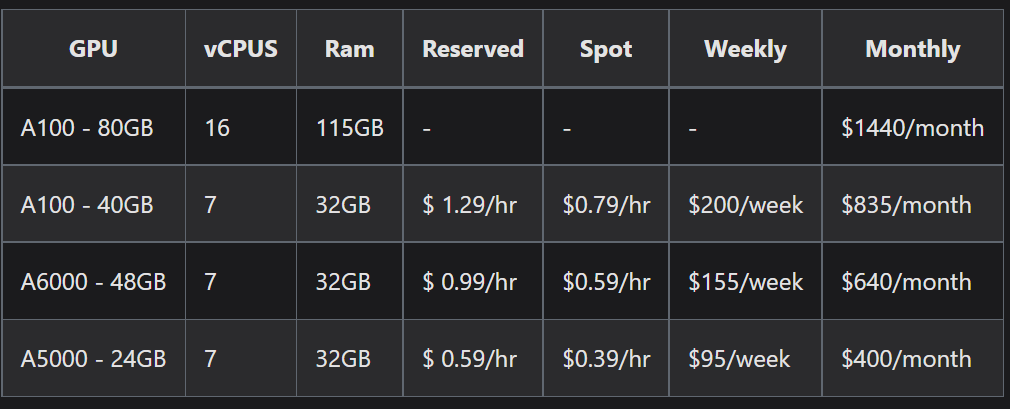

- 1.29$ per hour for standard NVIDIA A100

JarvisLabs is a competitively priced, flexible, and simple-to-set-up cloud GPU platform that offers a range of pricing options and GPU types, with all the necessary software pre-installed and a Jupyter notebook ready within moments of spinning up the GPU.

The startup was first designed as a way for data scientists and students to acquire cloud GPUs at an affordable rate and with easy access.

Notably, JarvisLabs does not require you to prepay for GPUs.

Instead, users pay through a wallet system that they top up whenever they want, which lets them cap their spending. This flexibility allows students and other users to set their budget according to their needs, saving them significant costs.

This is ‘the’ cloud GPU for beginners.

JarvisLabs charges a standard $1.29 per hour for their A100 40GB GPU with 7 vCPUs and 32 GB of RAM. However, this price can be reduced by up to 60% with their ‘spot’ instances, which use spare GPU capacity.

The standard $1.29 per hour for the A100 becomes $0.79 per hour as a spot instance, a saving of about 39%.

GPU Locations

JarvisLabs appears to have its data centers exclusively in India, as originally intended.

Types and Pricing of GPUs

Check out the JarvisLabs pricing page.

1. Ampere

JarvisLabs offers NVIDIA A100, A6000, and A5000 GPUs, with options to scale up.

2. Quadro

What Are Spot Instances?

Spot (or preemptible) instances are not reserved: they run on discounted surplus GPU capacity and can save up to 60% on costs. Because they pause automatically when demand rises, they are best suited to interruptible workloads, such as learning and experimentation, or training jobs that save checkpoints and can resume after a pause.
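Checkpointing is what makes spot instances practical for training: the job periodically saves its state and, when restarted after an interruption, resumes from the last save instead of starting over. The Python sketch below shows the pattern with a toy loop whose “loss” stands in for real training state; in practice you would save model and optimizer state with your framework’s own utilities. The `interrupt_at` parameter is hypothetical and only simulates a pause.

```python
import json
import os
import tempfile
from typing import Optional


def train(total_steps: int, ckpt_path: str, interrupt_at: Optional[int] = None) -> int:
    """Toy training loop that checkpoints every step and resumes from the last checkpoint."""
    step, loss = 0, 1.0
    if os.path.exists(ckpt_path):  # resume if a previous run left a checkpoint
        with open(ckpt_path) as f:
            state = json.load(f)
        step, loss = state["step"], state["loss"]
    while step < total_steps:
        if interrupt_at is not None and step == interrupt_at:
            return step  # simulated spot pause; progress so far is on disk
        loss *= 0.9      # stand-in for one optimization step
        step += 1
        # Write to a temporary file, then rename, so a kill mid-write
        # cannot leave a corrupted checkpoint behind.
        tmp_path = ckpt_path + ".tmp"
        with open(tmp_path, "w") as f:
            json.dump({"step": step, "loss": loss}, f)
        os.replace(tmp_path, ckpt_path)
    return step


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "checkpoint.json")
    print("stopped at step", train(10, path, interrupt_at=3))  # first run is paused
    print("finished at step", train(10, path))                 # second run resumes
```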

Ease of Use

JarvisLabs GPUs are easy to set up and well suited to beginners and data scientists alike. All the data science software comes pre-installed, and an integrated Jupyter notebook is ready to fire up and use with a GPU. The interface is intuitive and easy to understand, and the documentation is detailed and helpful.

Additional Special Pricing

Students, social workers, and those working on open-source software or tools are eligible to apply for discounted special pricing by filling out this form.

Remember to create an account before submitting the form.

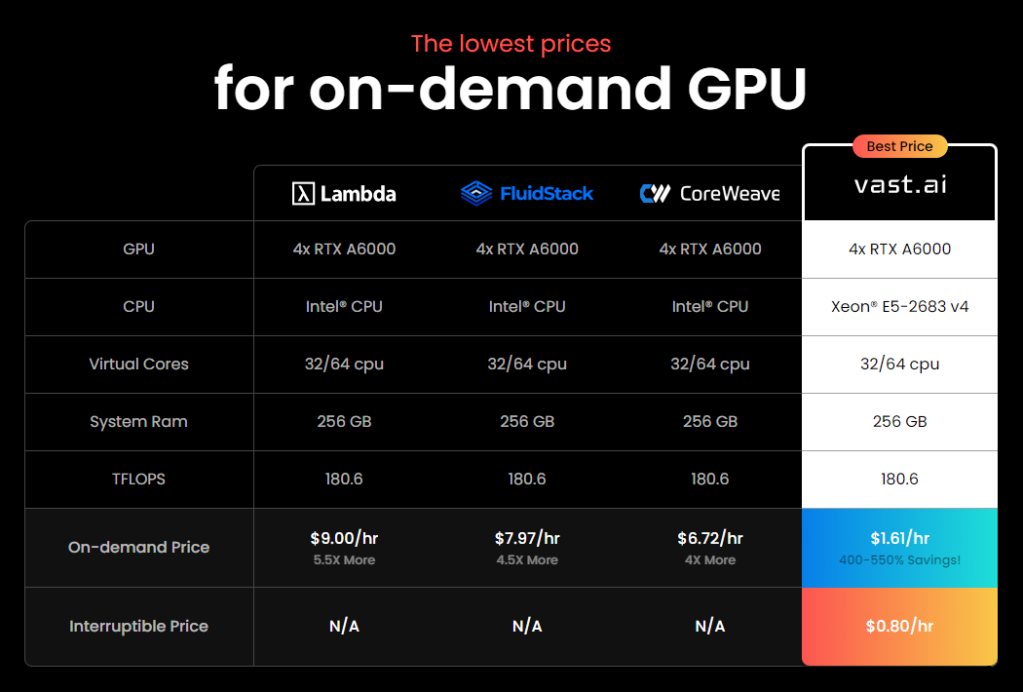

13. Vast AI

- On-demand pricing

- GPUs rented by other users

- Various types of GPUs at affordable rates

- Innovative hosting system

- $1.61 per hour for RTX A6000

- Linux-based images

- Docker images for AI workloads (including Jupyter)

Vast AI is a global rental marketplace for low-cost GPUs for high-performance computing.

By enabling hosts to rent out their GPU hardware, they reduce the cost of processing-intensive workloads. Clients may then utilize their web search interface to locate the cheapest prices for computing in accordance with their needs, run commands, or open SSH sessions.

They feature a straightforward user interface and offer command-only instances, SSH instances, and Jupyter instances with the Jupyter GUI. They also offer a deep learning performance function (DLPerf) that estimates how well a deep learning task will perform.

GPU Locations

Because Vast AI relies entirely on data providers and individual GPU hosts, there are no fixed locations.

Available GPUs and Pricing

Check out their pricing page here.

Thanks to this marketplace model, on-demand hourly rates can be several times lower than standard cloud pricing, as shown below.

Ease of Use

Vast AI’s systems are Ubuntu-based and do not offer remote desktops. On-demand instances run at a fixed fee set by the host, for as long as the client wants. Vast AI also offers interruptible instances, for which clients set a bid price; only the highest bid runs at any given time.
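The interruptible model, where only the highest bid runs, can be sketched as a simple allocation rule for a single machine. This is a simplified, hypothetical model for illustration only (the tie-breaking rule in particular is an assumption), not Vast AI's actual scheduler.

```python
from typing import Dict, List, Tuple


def allocate(bids: Dict[str, float]) -> Tuple[str, List[str]]:
    """Given client -> bid ($/hour) for one machine, return (running client, interrupted clients).

    Simplified model: the highest bid runs and every other bid is paused.
    Ties are broken alphabetically by client name (an arbitrary assumption).
    """
    if not bids:
        raise ValueError("no bids for this machine")
    # Sort by descending bid, then by name so the result is deterministic.
    ranked = sorted(bids.items(), key=lambda kv: (-kv[1], kv[0]))
    running = ranked[0][0]
    interrupted = [client for client, _ in ranked[1:]]
    return running, interrupted
```

With bids of $0.10, $0.15 and $0.12 per hour, the $0.15 bidder runs and the other two are paused until they outbid it or it stops.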

Vast AI is extremely affordable ($0.20/hour for an RTX 2080 Ti on demand, $0.10/hour interruptible) and also really straightforward. One click can top up your account or acquire a GPU, and the user interface packs a lot of information into a compact space.

It’s not widely known, but a major benefit of these distributed GPU rental services is access to consumer GPUs. NVIDIA restricts the use of its consumer GPUs in data centers, even though they share many capabilities with their significantly more expensive data center counterparts. This is why consumer cards such as the RTX 2080 are not offered on AWS or Azure.

Getting back to the topic of convenience, Vast AI offers Jupyter images for easy, one-click environments ready for deep learning with pre-installed libraries.

14. Runpod.io

- Affordable rates

- NVIDIA A6000 48GB costs $0.79 per hour to run

- NVIDIA A100 80GB costs $2.09 per hour to run

- Bare metal GPUs available

- Community and dedicated data centers, with dynamic GPU pricing (serverless hosting)

- Ready for deep learning and diffusion models (e.g., Stable Diffusion)

- Docker containerization for isolation and security

- Jupyter image

Runpod has perhaps the cheapest GPU options available, advertising $0.20 per hour for a GPU-backed Jupyter instance.

If you’re looking for an affordable, ambitious start-up with frequent bonuses and flexible options, then Runpod is for you.

Like any other IaaS provider, Runpod offers GPUs, but it splits them into two categories:

- Secure Cloud: Secure Cloud runs in Tier 3/Tier 4 data centers operated by trusted partners. To minimize downtime, these partners offer high reliability along with scalability, security, and quick response times. Secure Cloud is highly recommended for sensitive and business workloads.

- Community Cloud: Community Cloud offers strength in numbers and global diversity. Through its decentralized platform, it supplies peer-to-peer GPU compute that links different compute providers to consumers. To deliver compute with strong security and uptime, community cloud providers are invite-only and are vetted for security.

Runpod calls its instances pods.

RunPod uses tools such as Docker to isolate and containerize guest workloads on a host machine. It has built a decentralized platform that connects thousands of computers while providing a seamless user experience.

There are two types of pods.

On-Demand Instance Pod

On-demand instances are suited to non-interruptible workloads. As long as you have enough credit to keep your pod running, you pay the on-demand price and do not compete with other customers for the hardware.

With resources allocated specifically to your pod, On-Demand pods can run without interruption for an unlimited amount of time. They are more expensive than Spot pods.

Spot Instance Pod

A spot instance is an interruptible instance that can typically be rented for much less than an on-demand instance. Spot instances are excellent for stateless workloads, such as APIs, or for workloads that can save their progress to volume storage on a recurring basis. If your spot instance is interrupted, your volume disk is kept.

Spot pods use spare computing capacity and let you bid for those resources. Although resources are committed to your pod, someone else may outbid you or launch an On-Demand pod that interrupts yours. When this happens, your pod receives a SIGTERM and has 5 seconds to shut down before a SIGKILL is sent. In those five seconds, you can save any data to your volume disk; alternatively, you can push data to the cloud periodically.

With its decentralized, community-hosted GPUs, Runpod is similar to Vast AI.

GPU Locations

For the community-driven servers, there are no fixed locations, as users around the globe host their own GPUs.

However, its Secure Cloud data centers are located in:

- Kansas, USA

- Oslo, Norway

- Philadelphia, USA (HQ)

GPU Types and Pricing

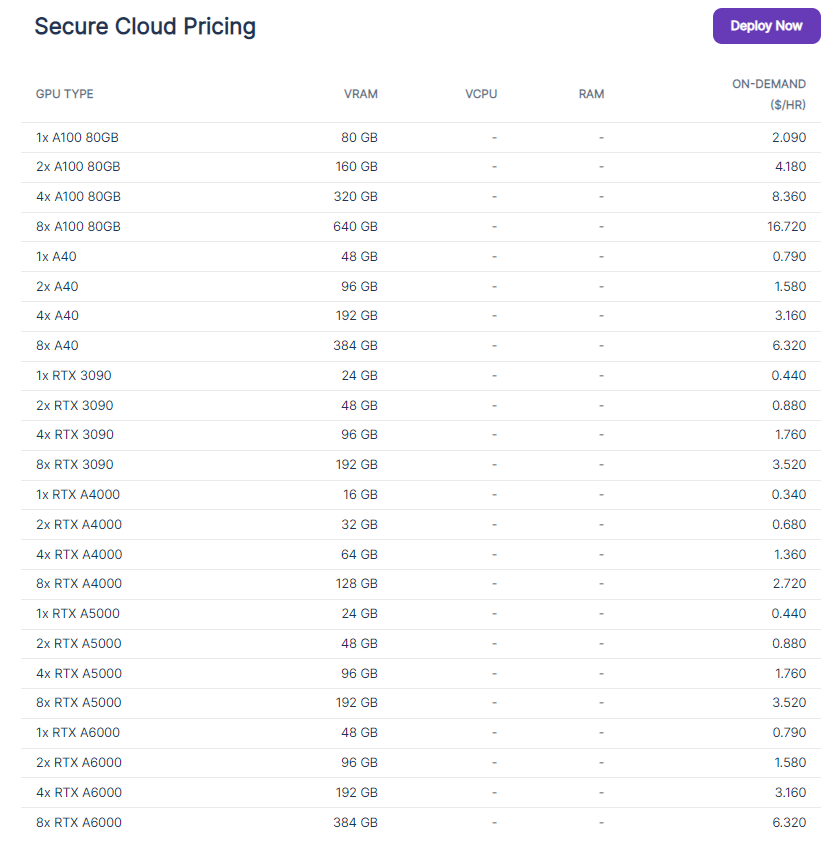

Check Runpod.io’s pricing here.

Secure Cloud pricing list is shown below:

Community Cloud pricing list is shown below:

Ease of Use

Runpod is simple to set up, with pre-installed libraries such as TensorFlow and PyTorch readily available on a Jupyter instance. Community-hosted GPUs and affordable pricing are an added bonus, and the user interface is simple and easy to understand.

15. Banana.dev

- Affordable, on-demand pricing

- Serverless hosting

- Machine learning focused

- Easy setup

- Small-scale start-up

- Only NVIDIA A100s

- One-click deployments of popular ML models such as Stable Diffusion and Whisper

Banana is a San Francisco-based start-up whose services focus mainly on affordable serverless A100 GPUs tailored for machine learning.

The platform focuses primarily on model deployment and comes with pre-built templates for GPT-J, Dreambooth, Stable Diffusion, Galactica, BLOOM, Craiyon, BERT, CLIP and even custom models.

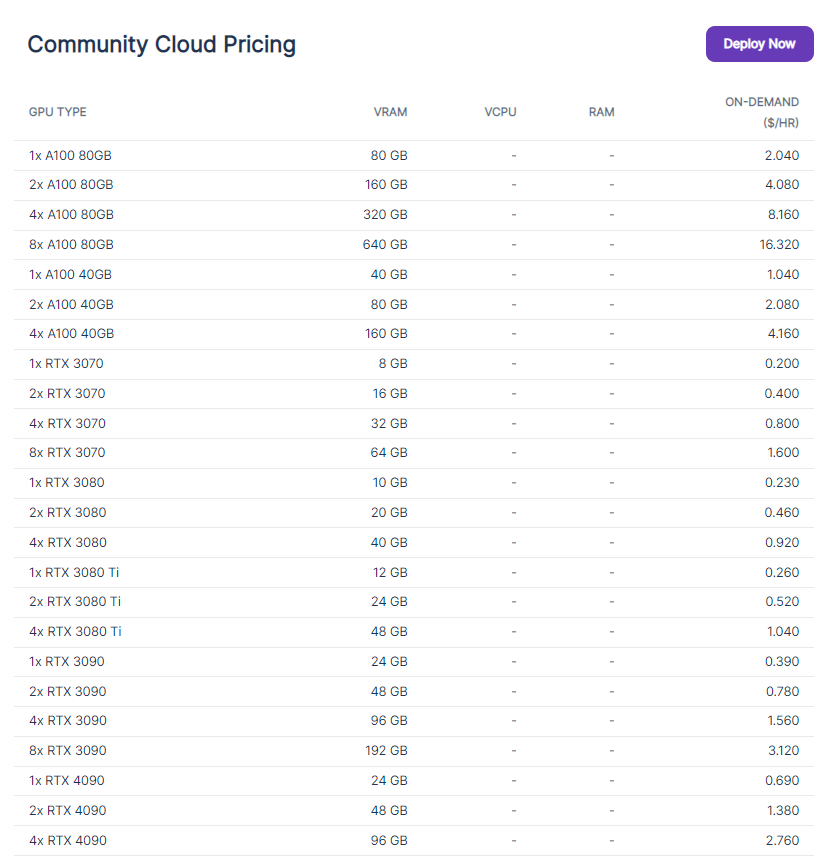

GPU Types and Pricing

Banana offers on-demand, serverless GPUs and therefore comes at quite an affordable rate compared to its competitors.

You can check the pricing page here.

Ease of Use

It is easy to get Banana GPUs up and running. The setup process is simple, and the documentation includes a quick-start guide that is simple and intuitive.

The entire thing is focused on model deployment and ML, so everything comes easily, with some templates even letting you deploy with one click. You can take a look at their one-click models here.

16. Fluidstack

The Airbnb of GPU computation

- Cheap rates

- Tier 2 to Tier 4 data centers only

- Reliable and efficient performance

- Machine learning focused

- 24-hour support

- 47,000+ servers for optimized speed

- ISO 27001, 27017, 27018, 27701 and more certified

Fluidstack was originally a marketplace for exchanging unused bandwidth, similar to a VPN service.

Today, however, Fluidstack is entirely focused on the exchange of GPU capacity.

It is designed for when you need a cloud GPU at an affordable rate, with reliable speed and security, anywhere in the world at a moment’s notice.

All of their instances are based on a Linux image.

GPU Locations

Fluidstack has cloud GPU servers all over the world. You can check the list of locations here.

GPU Types and Pricing

On Fluidstack, you are able to use on-demand or monthly savings plans for better economic viability. If required, you are also able to deploy a cluster of GPUs.

1. Hourly

2. Monthly

Ease of Use

Fluidstack is easy to get started with: you sign up and, in a few clicks, procure a GPU that fits your needs. Pricing is also quite flexible, and the large number of data centers makes for convenient, low-latency connections.

17. IBM Cloud

- Highly optimized computing services

- Reliable security

- Databases

- $1.95 per hour for NVIDIA Tesla P100

- Migration services

- Storage capabilities

- Highly configurable

IBM Cloud’s suite of cloud computing services covers platform as a service (PaaS), infrastructure as a service (IaaS), and software as a service (SaaS). Its IaaS offering provides virtualized IT resources, including processing power, storage, and networking, over the internet. Both virtual servers and bare metal servers are available.

Its offering includes bare-metal GPU instances with Intel Xeon 4210, Xeon 5218, and Xeon 6248 processors. Bare-metal instances let customers run conventional or specialized, high-performance, and latency-sensitive workloads directly on the server hardware, just as they would with on-premises GPUs.

For its bare-metal servers, IBM also provides instances with NVIDIA T4 GPUs and Intel Xeon processors with up to 40 cores, while its virtual servers offer instances with NVIDIA V100 and P100 GPUs.

GPU Locations

IBM Cloud has a global infrastructure as shown below. You can also check locations here.

GPU Types and Pricing

Check IBM Cloud’s pricing page here.

IBM Cloud offers a multitude of pricing structures for flexible budgeting.

Public – provides access to virtual resources in a multi-tenancy model. An organization can choose to deploy its applications in one or more than one geography.

Dedicated – A single-tenant service cloud IBM hosts in one of its data centers. An organization can connect to the environment using a direct network connection or VPN; however, IBM manages the platform.

Private – IBM platform that an organization deploys as a private cloud in its own data center behind a firewall.

The pricing can be confusing. IBM offers certain GPUs depending on the GPU server type you select:

- Bare Metal Server GPUs (Nvidia T4)

- GPUs on Virtual Servers for Virtual Private Cloud (Nvidia V100)

- GPU on Classic Virtual Servers (Nvidia P100 or V100)

Some GPU servers are available for hourly payment plans, while others are only for monthly.

For simplicity’s sake, we’ll list some of the prices here. Bare metal GPU servers with the NVIDIA Tesla T4 appear to be available only on a monthly basis, not hourly.

| GPU Instance | GPU Count | vCPU | Memory | Price |
|---|---|---|---|---|
| Tesla P100 | 1 | 8 cores | 60 GB | $1.95/hr |
| Tesla V100 | 1 | 8 cores | 20 GB | $3.06/hr |
| Tesla V100 | 1 | 8 cores | 64 GB | $2.49/hr |
| Tesla V100 | 2 | 16 cores | 128 GB | $4.99/hr |
| Tesla V100 | 2 | 32 cores | 256 GB | $5.98/hr |
| Tesla V100 | 1 | 8 cores | 60 GB | $2,233/month |
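When a provider offers both hourly and monthly plans, a quick break-even calculation shows which is cheaper for your expected usage: divide the monthly price by the hourly price to get the number of hours per month above which the monthly plan wins. The sketch below uses the two single-V100 rows from the table above as an illustration only; they are close but not identical configurations (60 GB vs. 64 GB of memory), and prices may have changed since this was written.

```python
HOURS_PER_30_DAY_MONTH = 30 * 24  # 720 hours


def break_even_hours(monthly_price: float, hourly_price: float) -> float:
    """Hours of use per month above which the monthly plan becomes cheaper than paying hourly."""
    return monthly_price / hourly_price


def cheaper_plan(hours_used: float, monthly_price: float, hourly_price: float) -> str:
    """Compare the hourly bill for the given usage against the flat monthly price."""
    return "monthly" if hours_used * hourly_price > monthly_price else "hourly"


# Illustrative figures: 1 x V100 at $2,233/month vs. 1 x V100 at $2.49/hr.
hours = break_even_hours(2233, 2.49)
print(f"Break-even at about {hours:.0f} hours/month "
      f"(a 30-day month has {HOURS_PER_30_DAY_MONTH} hours)")
print("Cheaper for 24/7 use:", cheaper_plan(HOURS_PER_30_DAY_MONTH, 2233, 2.49))
```

With these particular figures the break-even point (roughly 897 hours) is longer than a 30-day month, so for these two rows paying hourly stays cheaper even with round-the-clock use.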

Ease of Use

IBM is feature-rich and extremely reliable as one of the industry’s leading providers of cloud GPUs and other IT services. Thanks to its globally distributed servers and intuitive user interface, the service is convenient and fast. To see how to set up a GPU instance, visit this guide.

18. Brev.dev

- API

- Variety of use cases

- Multiple AI model templates

- NVIDIA V100 GPU costs $3.67 per hour

- Specialized work environments

- Simple user interface

Brev is a multi-faceted start-up oriented towards data science and biotech. It serves individuals and teams, but especially software engineers looking for a virtual environment that is ready to use for their specific purposes.

Additionally, its AI model templates feature the same models as Banana.dev (see above) and are just as easy to deploy.

GPU Pricing and Types

Check out their pricing page here: https://brev.dev/pricing

| GPU Model | vCPU | RAM | vRAM (GB) | On-Demand ($/hr) | Spot ($/hr) |
|---|---|---|---|---|---|
| 1 x AMD V520 | – | – | 8 | $0.45 | $0.14 |
| 1 x Nvidia T4 | – | – | 16 | $0.63 | $0.20 |
| 1 x Nvidia K520 | – | – | 8 | $0.78 | $0.24 |
| 1 x Nvidia M60 | – | – | 8 | $0.90 | $0.30 |
| 1 x Nvidia K80 | – | – | 12 | $1.08 | $0.38 |
| 1 x Nvidia A10G | – | – | 24 | $1.21 | $0.37 |
| 2 x AMD V520 | – | – | 16 | $2.08 | $0.63 |
| 2 x Nvidia M60 | – | – | 16 | $2.74 | $0.83 |
| 2 x Nvidia K520 | – | – | 16 | $3.12 | – |
| 1 x Nvidia V100 | – | – | 16 | $3.67 | $1.11 |
| 4 x AMD V520 | – | – | 32 | $4.16 | $1.25 |
| 4 x Nvidia T4 | – | – | 64 | $4.69 | $1.53 |
| 4 x Nvidia M60 | – | – | 32 | $5.47 | $1.65 |
| 4 x Nvidia A10G | – | – | 96 | $8.81 | $2.05 |
| 8 x Nvidia K80 | – | – | 96 | $8.64 | $2.60 |
| 16 x Nvidia K80 | – | – | 192 | $17.28 | $5.19 |
| 8 x Nvidia A10G | – | – | 192 | $19.55 | $5.87 |
| 8 x Nvidia A100 | – | – | 320 | $39.33 | $11.80 |
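Two quick calculations help when reading a table like this: the percentage saved by choosing spot over on-demand, and the hourly price per GPU for multi-GPU configurations. The sketch below applies both to a few rows copied from the Brev table above; the function names are illustrative, not part of any Brev API.

```python
# (on-demand $/hr, spot $/hr, GPU count) for a few rows of the table above.
PRICES = {
    "1 x Nvidia T4": (0.63, 0.20, 1),
    "1 x Nvidia V100": (3.67, 1.11, 1),
    "8 x Nvidia A100": (39.33, 11.80, 8),
}


def spot_discount_pct(on_demand: float, spot: float) -> float:
    """Percentage saved by paying the spot price instead of the on-demand price."""
    return (1 - spot / on_demand) * 100


def per_gpu_hourly(total_hourly: float, num_gpus: int) -> float:
    """Hourly price of a configuration divided evenly across its GPUs."""
    return total_hourly / num_gpus


for name, (on_demand, spot, gpus) in PRICES.items():
    print(f"{name}: spot saves {spot_discount_pct(on_demand, spot):.1f}%, "
          f"on-demand ${per_gpu_hourly(on_demand, gpus):.2f} per GPU-hour")
```

For these rows, the spot discount works out to roughly 68 to 70 percent, and the 8 x A100 configuration costs a little under $5 per GPU-hour on demand.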

Ease of Use

Thanks to its easily deployable models and specialized environments, Brev is convenient for individuals or teams looking for a pre-built environment.

Conclusion

If you are a beginner looking for a ready-to-go Python environment with no technical setup involved, head on over to:

- Kaggle

- Google Colab

- Paperspace

If you are an experienced professional who’s looking for a fitting cloud GPU platform for your deep learning tasks, head on over to:

- AWS EC2

- Azure

- GCP

- IBM Cloud

- Tencent Cloud

- Genesis Cloud

- OVH Cloud

- Linode

If you are looking for alternatives with specialized solutions and specific pricing, head on over to:

- Vultr

- Runpod

- Banana

- CoreWeave

- Lambda Labs

- Jarvis Labs

- Vast.AI